By Evans Yang, VP, PUFsecurity

Artificial intelligence will play a pivotal role in the future of information security. By combining big data, deep learning, and machine learning, AI gives machines a kind of life: they can imitate human learning, replicate work behaviors, and bring new ways to operate businesses. However, AI assets are very valuable, which makes them a target for hackers. Once a hacker has an opportunity to discern how an AI model is trained and operated, the model can be easily manipulated. For instance, hackers can destroy or corrupt the training data, causing major disruption on both the supply and demand sides of the entire AI system. This article therefore discusses how to strengthen the security of AI systems, covering the structure of the AI hardware device, its security requirements, and the available solutions. We use PUFsecurity’s hardware root of trust module, PUFrt, as an example to help readers understand how combining an AI application architecture with a physical unclonable function (PUF) can strengthen hardware security.

Introducing the AI Hardware Device Architecture and Manufacturing Process

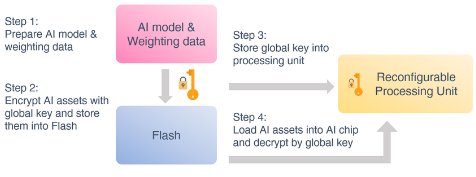

The main structure of an AI application device can be roughly divided into three parts: the AI application algorithm model and parameters (soft know-how), the storage unit (storage), and the AI computing unit (AI accelerator). The storage unit usually uses flash memory to store the AI algorithm model and parameters, while the AI computing unit (the AI chip) performs the computations of the AI algorithm model. From product design through manufacturing to deployment in market applications, the main process includes:

- Preparing AI model and parameters

- Encrypting the AI model and parameters to protect them, then storing them in the storage unit

- Writing the key and trust certificate used for the encryption onto the AI chip, where they serve as the key and authentication information required for decryption when the program starts

- Once the AI application starts, the encrypted algorithm model and parameters stored in the flash memory are loaded onto the AI chip. The chip then decrypts them using the pre-implanted key and authentication information, and executes the decrypted AI algorithm model and parameters to start the AI application function.

Figure 1: AI device architecture and manufacturing process
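The start-up sequence described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not PUFsecurity's implementation: the names `provision`, `boot_load`, and `keystream_xor` are hypothetical, the "authentication information" is modeled as an HMAC key, and a toy HMAC-based keystream stands in for whatever cipher engine a real AI chip would use.

```python
import hashlib
import hmac

NONCE_LEN = 16
TAG_LEN = 32


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher (HMAC-SHA256 in counter mode). It is a stand-in for
    the chip's real cipher engine; applying it twice restores the input."""
    out = bytearray()
    for index in range(0, len(data), 32):
        counter = (index // 32).to_bytes(8, "big")
        pad = hmac.new(key, nonce + counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[index:index + 32], pad))
    return bytes(out)


def provision(model: bytes, enc_key: bytes, auth_key: bytes, nonce: bytes) -> bytes:
    """Factory side: encrypt the model and append an authentication tag.
    The returned image is what would be written to flash."""
    ciphertext = keystream_xor(enc_key, nonce, model)
    tag = hmac.new(auth_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def boot_load(flash_image: bytes, enc_key: bytes, auth_key: bytes) -> bytes:
    """Chip side: authenticate the flash image first, then decrypt it."""
    if len(flash_image) < NONCE_LEN + TAG_LEN:
        raise ValueError("flash image is too short")
    nonce = flash_image[:NONCE_LEN]
    ciphertext = flash_image[NONCE_LEN:-TAG_LEN]
    tag = flash_image[-TAG_LEN:]
    expected = hmac.new(auth_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("flash image failed authentication")
    return keystream_xor(enc_key, nonce, ciphertext)
```

Calling `boot_load` on an image produced by `provision` with the same keys returns the original model bytes, while an image with even a single flipped bit is rejected before any decryption takes place.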

The security requirements of AI applications

There are many aspects to the security requirements of AI applications. The first is to protect important assets in AI design, such as big data and algorithms. The second is to protect AI machines from attacks by malicious third parties that secretly disrupt the learning mechanism or behavior of the machine while it is performing deep learning or executing tasks. The third is to protect the information handled by the AI technology, such as personal medical records, communications, and other consumer information.

When discussing possible security gaps in AI applications, it is important to consider them from the perspective of the system architecture and to examine the issues related to the integrity of the AI chip hardware. If the chip (AI accelerator) that performs the AI algorithm calculations has any integrity problem, such as loading tampered firmware, unauthorized functions or malicious commands may end up running on the AI chip. When this happens, the attacker can hijack and control the originally designed function. The original mechanism can no longer run as intended, which can lead to a whole range of security problems.
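One common way to detect tampered firmware is to measure it before it runs and compare the result against a trusted reference. The sketch below is a minimal illustration of that idea, not a description of any particular AI chip: `measure` and `load_firmware` are hypothetical names, and it assumes the trusted digest is kept somewhere an attacker cannot modify.

```python
import hashlib
import hmac


def measure(firmware: bytes) -> bytes:
    """Compute the SHA-256 digest of a firmware image."""
    return hashlib.sha256(firmware).digest()


def load_firmware(firmware: bytes, trusted_digest: bytes) -> bytes:
    """Return the firmware only if its digest matches the trusted reference.

    Assumption: trusted_digest comes from immutable storage on the chip.
    """
    if not hmac.compare_digest(measure(firmware), trusted_digest):
        raise RuntimeError("firmware integrity check failed; refusing to run")
    return firmware
```

The check is only as strong as the protection of the reference digest, which is why the integrity question leads directly to the hardware root of trust.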

Why add a hardware root of trust to the design of AI chips to address hardware integrity concerns?

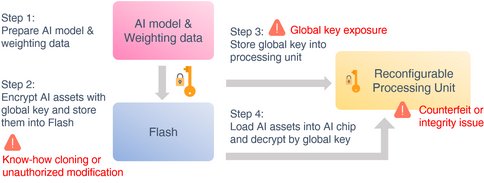

In the absence of a hardware root of trust, the chip’s protection mechanism is relatively easy to bypass. As a result, the chip cannot effectively protect the keys and trust certificates required for encryption and decryption. This leads to key leakage or an inability to resist disassembly and reverse engineering, which gives malicious third parties a chance to obtain AI inference models. Consequently, a company’s intellectual property (AI know-how) is exposed and can be illegally copied, eventually causing huge commercial losses. Furthermore, an attacker can tamper with the inference model, causing errors in the AI operation and losses for users. For example, if the active safety function of an ADAS (advanced driver assistance system) suddenly fails during a drive, the driver can easily misjudge the situation and end up in a car accident.

Figure 2: The security risks of AI applications

How to effectively improve AI application security and asset protection

It is important to consider the needs of AI application security and the protection of company assets when developing AI products. Beyond the performance of the AI algorithm model, the AI chip itself must have a built-in hardware root of trust that works together with other security measures to protect the key, strengthen the storage of the algorithm, and ensure the authenticity of the chip design. That makes it easier to prevent falsification and theft of the AI algorithm model and to avoid the loss of business and intellectual property.

The security measures that can be deployed are as follows:

1- Protecting AI chips with built-in PUF-based root of trust

PUFrt is a PUF-based root of trust solution designed for chips. It uses a random number generated by the physical unclonable function (PUF), which acts as a chip fingerprint and unique identification code (UID). In addition, the secret derived from the PUF can be used to encrypt secret keys for storage. PUFrt can also serve as a true random number generator for algorithms, enhancing their security functions. For AI applications, once PUFrt is built into an AI chip, the chip fingerprint derived from the PUF can be used as a secure root of trust. It can also protect and store the global key used to encrypt the AI algorithms.
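To make the idea of deriving several secrets from one chip fingerprint concrete, here is a small Python sketch. It is not the PUFrt API: `SimulatedPuf`, its methods, the salt, and the labels are all hypothetical, and a fixed byte string stands in for the response a real PUF would produce from manufacturing variation. The derivation uses HKDF with SHA-256 (RFC 5869), chosen here only as a well-known key derivation function.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


class SimulatedPuf:
    """Software stand-in for a PUF: each chip has its own fixed response."""

    def __init__(self, response: bytes):
        self._response = response  # never leaves the chip in real hardware

    def uid(self) -> bytes:
        """Public chip identifier derived from the fingerprint."""
        return hkdf_sha256(self._response, b"demo-salt", b"chip-uid", 16)

    def storage_key(self) -> bytes:
        """Secret key for encrypting other keys (for example a global key)."""
        return hkdf_sha256(self._response, b"demo-salt", b"key-storage", 32)
```

Because each output is derived under a different label, publishing the UID does not reveal the storage key, and two chips with different fingerprints end up with unrelated identifiers and keys.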

2- Re-encrypting the AI algorithm model and parameter data

In the AI production supply chain, a global key is used to encrypt and protect the AI model and parameter data, which are then written to flash memory for storage. Security concerns often arise because key storage on the chip is insecure and the supply chain as a whole lacks security, either of which can result in leakage of the global key.

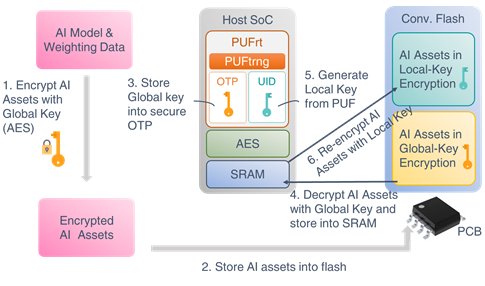

When an AI chip and its external flash memory are paired for the first time, the local key generated by the PUF inside the chip is used to re-encrypt the AI algorithm model and parameter data stored in the flash. At the same time, the global key that the customer originally used to encrypt the AI assets is written to the chip’s one-time programmable (OTP) memory and stored securely. The process is as follows:

- In a secure environment, the customer encrypts the AI asset with a global key

- The data encrypted by the global key will be written into the flash memory and installed on the circuit board

- At the same time, the global key is also written onto the OTP and stored securely

- The system will use the global key to decrypt the AI assets and temporarily store them in SRAM

- The PUF (UID) in PUFrt is then used to generate the local key

- The unique PUF-based local key in each chip system will be used to re-encrypt and protect AI assets in the flash

Figure 3: The operation of an AI system with embedded PUFrt
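The pairing steps listed above can be walked through with a short Python sketch. It rests on several assumptions that are not in the original text: the `Chip` class, its method names, and the dictionary that plays the role of the flash are hypothetical, the PUF response is a fixed byte string, and a toy HMAC-based keystream replaces the real cipher.

```python
import hashlib
import hmac


def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Toy keystream cipher standing in for the chip's real cipher engine.
    Applying it twice with the same key restores the input."""
    out = bytearray()
    for index in range(0, len(data), 32):
        counter = (index // 32).to_bytes(8, "big")
        pad = hmac.new(key, counter, hashlib.sha256).digest()
        out.extend(b ^ p for b, p in zip(data[index:index + 32], pad))
    return bytes(out)


class Chip:
    def __init__(self, puf_response: bytes, global_key: bytes):
        self._puf_response = puf_response  # simulated chip fingerprint
        self._otp_global_key = global_key  # global key written to OTP

    def _local_key(self) -> bytes:
        # The local key is derived from the PUF and never injected from outside.
        return hmac.new(self._puf_response, b"local-key", hashlib.sha256).digest()

    def first_pairing(self, flash: dict) -> None:
        """Decrypt with the global key, then re-encrypt with the local key."""
        if flash["bound"]:
            raise RuntimeError("flash is already bound to a chip")
        sram = xor_cipher(self._otp_global_key, flash["model"])  # plaintext held in SRAM
        flash["model"] = xor_cipher(self._local_key(), sram)
        flash["bound"] = True

    def load_model(self, flash: dict) -> bytes:
        """Normal boot after pairing: decrypt the flash with the local key."""
        return xor_cipher(self._local_key(), flash["model"])
```

After `first_pairing`, the flash contents no longer depend on the global key. If the flash is copied to a second chip with a different PUF response, `load_model` on that chip yields unusable bytes, even when both chips were provisioned with the same global key.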

The purpose of the approach mentioned above is to avoid security risks that may result from externally injected keys. Protecting the data with a local key generated inside the chip can lower the chances of the key being revealed during the injection process. Using the unique PUF “fingerprint” of each chip as the encryption key of the system can also prevent the internal secrets in the flash memory from being stolen or forged.

In a competitive landscape where AI applications are booming and companies are investing in the development and protection of intellectual property (such as AI chip designs, AI algorithm models, and parameters), security protection should not be overlooked. If the AI chip or the algorithm models and parameters are maliciously tampered with or stolen, the rights of both AI developers and users are jeopardized. This can create doubt and mistrust in the security of user applications and result in huge losses for companies.

This article has proposed a hardware security solution that is more secure than conventional software protection. A physical unclonable function (PUF) acts like a unique chip fingerprint. When each device has a unique secret that is difficult for outsiders to steal, it is easier to prevent large-scale remote cracking of these devices. AI devices installed in public spaces are easy targets for many attackers, so it is important to protect them with hardware mechanisms. Doing so not only ensures that AI products can execute safely in various environments, but also ensures that the applications function properly, gives users a good experience, and safeguards trust in a company’s intellectual property assets and competitiveness.

For more information about PUFsecurity and PUFrt, please visit: www.pufsecurity.com
