AI Accelerator (NPU) IP - 3.2 GOPS for Audio Applications

Designed for quick and seamless deployment into customer hardware, TimbreAI delivers optimal performance within the strict power and area requirements of today’s advanced audio devices. TimbreAI’s unified compute pipeline architecture enables highly efficient hardware scheduling and advanced memory management to achieve unsurpassed end-to-end low-latency performance. This minimizes die area, saves power, and maximizes performance.

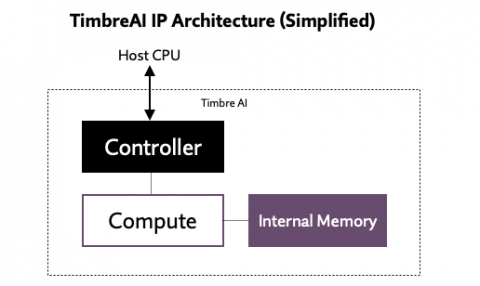

Block Diagram of the AI Accelerator (NPU) IP - 3.2 GOPS for Audio Applications

AI Inference IP

- Highly scalable inference NPU IP for next-gen AI applications

- AI accelerator (NPU) IP - 16 to 32 TOPS

- AI accelerator (NPU) IP - 1 to 20 TOPS

- AI accelerator (NPU) IP - 32 to 128 TOPS

- AI Inference IP. Ultra-low power, tiny, std CMOS. ~ 100K parameter RNN

- RISC-V-based AI IP development for enhanced training and inference