AI Processor Accelerator

The secret to our success lies in our efficient utilisation of compute elements and in intelligent memory reuse through reinforcement-learning-based software, which keeps data flowing seamlessly to the compute engines with minimal idle cycles. With Gyrus's compilers and software tools, you can effortlessly port any neural network to our hardware accelerator and unlock exceptional efficiency, even with large activations and weights.

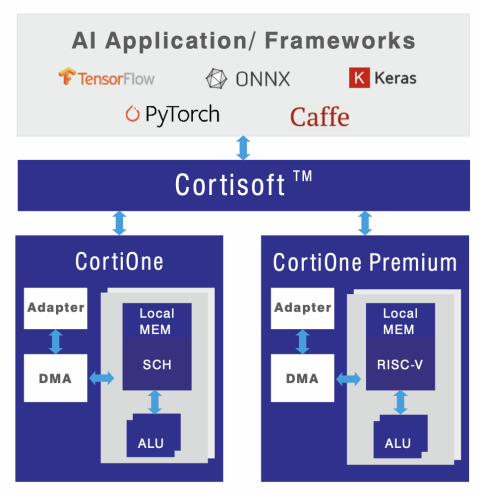

Our compilers streamline hardware configuration, reducing SoC complexity and power consumption while enabling AI algorithms to run smoothly on edge devices. The scheduler performs a neural scheduler search based on reinforcement learning. With a cycle-accurate C-Model, we create a Digital Twin of the NNA IP, ensuring efficient model deployment over the long term. Elevate your edge device capabilities with Cortisoft from Gyrus!


Block Diagram of the AI Processor Accelerator IP Core

AI Accelerator IP

- AI accelerator (NPU) IP - 16 to 32 TOPS

- AI accelerator (NPU) IP - 1 to 20 TOPS

- AI accelerator (NPU) IP - 32 to 128 TOPS

- Deeply Embedded AI Accelerator for Microcontrollers and End-Point IoT Devices

- Performance Efficiency Leading AI Accelerator for Mobile and Edge Devices

- High-Performance Edge AI Accelerator