AI processor (NPU) IP

Features a scalable architecture with over 40 TOPS of computing power, capable of handling a wide range of inference tasks.
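For readers unfamiliar with the TOPS figure, the short sketch below shows how a peak-throughput number of this kind is conventionally derived: MAC units, times two operations per MAC (multiply and add), times clock frequency. The MAC count and clock used here are purely hypothetical assumptions chosen for illustration; the listing does not state the NPU's actual configuration.

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second.

    One multiply-accumulate (MAC) is conventionally counted as two
    operations: one multiply and one add.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


# Hypothetical configuration for illustration only (not from the datasheet).
example = peak_tops(mac_units=16_384, clock_hz=1.25e9)
print(f"{example:.2f} TOPS")
```

Peak TOPS is an upper bound. Sustained throughput on a real model depends on utilization, memory bandwidth, and the precision in use.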

・Multicore Parallel Processing:

A multicore design that can concurrently process multiple diverse models.

・Mixed Precision Computation:

Uses mixed-precision computation to balance inference performance and accuracy.

・Extensive Data Format Support:

Supports a broad range of data formats, including INT4/INT8 and FP4/FP8/FP16.

・Comprehensive Model Support:

Supports ONNX, enabling deployment of a diverse set of AI models.

・Edge Computing Optimization:

Optimized for edge computing with high power, performance, and area (PPA) efficiency.
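The trade-off behind mixed precision and the INT4/INT8 formats listed above can be illustrated with a minimal sketch of symmetric per-tensor integer quantization. This is a generic textbook scheme, assumed here for illustration. The listing does not describe how this NPU quantizes data, and the sample weights are made up.

```python
def quantize(values, bits):
    """Symmetric per-tensor quantization to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1              # 127 for INT8, 7 for INT4
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate real values."""
    return [x * scale for x in q]


def max_error(values, bits):
    """Largest absolute round-trip error at the given bit width."""
    q, scale = quantize(values, bits)
    return max(abs(a - b) for a, b in zip(values, dequantize(q, scale)))


# Made-up sample weights, for illustration only.
weights = [0.82, -0.41, 0.05, -0.97, 0.33, 0.0]
err_int8 = max_error(weights, 8)
err_int4 = max_error(weights, 4)
print(f"INT8 max error: {err_int8:.4f}")
print(f"INT4 max error: {err_int4:.4f}")
```

Narrower formats cut storage and compute cost but increase rounding error, which is why a mixed-precision design might keep accuracy-sensitive layers at higher precision and run the rest at lower precision.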


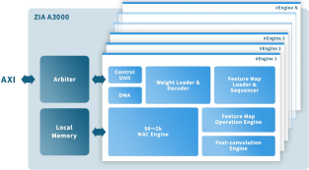

Block Diagram of the AI processor (NPU) IP Core

AI IP

- RT-630-FPGA Hardware Root of Trust Security Processor for Cloud/AI/ML SoC FIPS-140

- NPU IP family for generative and classic AI with highest power efficiency, scalable and future proof

- NPU IP for Embedded AI

- Tessent AI IC debug and optimization

- AI accelerator (NPU) IP - 16 to 32 TOPS

- Complete Neural Processor for Edge AI