Highly scalable performance for classic and generative on-device and edge AI solutions

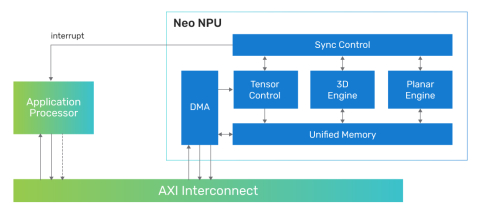

The Neo NPUs target a wide variety of applications, including sensor, audio, voice/speech, vision, radar, and more. Their broad performance range suits them to ultra-power-sensitive applications such as IoT and hearables/wearables, as well as high-performance systems in AR/VR, automotive, and more.

The product architecture natively supports the processing required for many network topologies and operators, allowing complete or near-complete offload from the host processor. Depending on the application's needs, the host processor can be an application processor, a general-purpose MCU, or a DSP that handles pre-/post-processing and associated signal processing, while the NPU manages the inferencing.

Block Diagram of the Highly scalable performance for classic and generative on-device and edge AI solutions

AI IP

- RT-630 Hardware Root of Trust Security Processor for Cloud/AI/ML SoC FIPS-140

- RT-630-FPGA Hardware Root of Trust Security Processor for Cloud/AI/ML SoC FIPS-140

- NPU IP for Embedded AI

- RISC-V-based AI IP development for enhanced training and inference

- Tessent AI IC debug and optimization

- NPU IP family for generative and classic AI with highest power efficiency, scalable and future proof