NPU

AI data-processing workloads at the edge are already transforming use cases and user experiences. The third-generation Ethos NPU helps meet the needs of future edge AI use cases.

The Ethos-U85 supports transformer-based models at the edge, the foundation for newer language and vision models. It scales from 128 to 2048 MAC units and is 20% more energy efficient than the Arm Ethos-U55 and Arm Ethos-U65, enabling higher-performance edge AI use cases in a sustainable way. Because it uses the same toolchain as previous Ethos-U generations, partners can migrate seamlessly and continue to leverage their investments in Arm-based machine learning (ML) tools.
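As a rough illustration of what the 128 to 2048 MAC range means for throughput, the sketch below computes a theoretical peak by counting each MAC as two operations (one multiply, one accumulate) per clock cycle. The 1 GHz clock and the helper name `peak_ops_per_second` are assumptions made for illustration, not figures from this listing. Sustained throughput depends on the chosen configuration, the memory system, and the model being run.

```python
def peak_ops_per_second(mac_units: int, clock_hz: float) -> float:
    """Theoretical peak, counting each MAC as two operations per cycle."""
    return mac_units * 2 * clock_hz


# Assumption: a 1 GHz clock, chosen only to make the arithmetic easy to read.
ASSUMED_CLOCK_HZ = 1.0e9

# The smallest and largest MAC counts named in the description above.
for macs in (128, 2048):
    tops = peak_ops_per_second(macs, ASSUMED_CLOCK_HZ) / 1e12
    print(f"{macs:>4} MAC units: {tops:.3f} TOPS theoretical peak")
```

Under these assumptions the largest configuration has 16 times the theoretical peak of the smallest, since the figure scales linearly with the MAC count.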

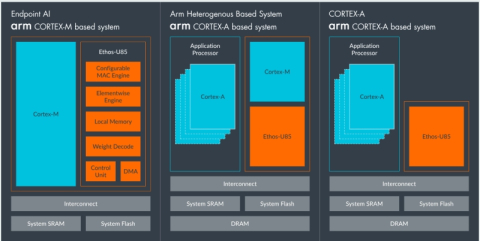

Block Diagram of the NPU IP Core