NPU IP family for generative and classic AI with highest power efficiency, scalable and future proof

NeuPro-M is a scalable NPU architecture with a power efficiency of up to 350 Tera Ops Per Second per Watt (TOPS/Watt).

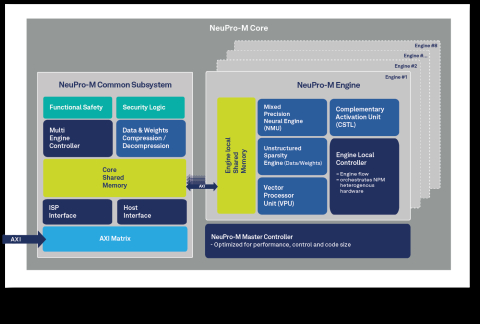

NeuPro-M provides a major leap in performance thanks to its heterogeneous coprocessors, which process in parallel at two levels: within each internal processing engine, and between the engines themselves.

NeuPro-M ranges from 4 TOPS up to 256 TOPS per core and scales to above 1200 TOPS in multi-core configurations. It can therefore cover a wide range of AI compute needs and fit a broad range of end markets, including infrastructure, industrial, automotive, PC, consumer, and mobile.
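The scaling figures above can be turned into a quick sizing estimate. The following is a minimal sketch, not a vendor tool: the function names `min_cores` and `min_power_watts` are hypothetical, and it assumes that throughput adds linearly across cores and that the quoted peak efficiency applies at the chosen operating point. Neither assumption is confirmed by the description, so the results are best-case bounds only.

```python
import math

# Figures quoted in the product description.
MAX_TOPS_PER_CORE = 256    # largest single-core configuration
PEAK_TOPS_PER_WATT = 350   # an "up to" figure, so a best case


def min_cores(target_tops: float, tops_per_core: float = MAX_TOPS_PER_CORE) -> int:
    """Smallest core count whose summed peak TOPS meets the target.

    Assumption: throughput adds linearly across cores (an idealization).
    """
    if target_tops <= 0 or tops_per_core <= 0:
        raise ValueError("TOPS values must be positive")
    return math.ceil(target_tops / tops_per_core)


def min_power_watts(tops: float, tops_per_watt: float = PEAK_TOPS_PER_WATT) -> float:
    """Lower bound on power for a given throughput.

    Assumption: the quoted peak efficiency holds at this throughput;
    real power draw can only be equal or higher.
    """
    if tops < 0 or tops_per_watt <= 0:
        raise ValueError("invalid throughput or efficiency")
    return tops / tops_per_watt


if __name__ == "__main__":
    cores = min_cores(1200)              # cores needed to reach 1200 TOPS
    peak = cores * MAX_TOPS_PER_CORE     # aggregate peak under linear scaling
    print(cores, peak, round(min_power_watts(peak), 2))  # prints: 5 1280 3.66
```

Under these assumptions, five 256-TOPS cores give 1280 TOPS, which is consistent with the "above 1200 TOPS" multi-core figure. Peak throughput and peak efficiency may not be reached in the same configuration, so the power value is a floor rather than a prediction.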

With several orthogonal memory bandwidth reduction mechanisms and a decentralized architecture of NPU management controllers and memory resources, NeuPro-M can ensure full utilization of all its coprocessors while maintaining stable and concurrent data tunneling that eliminates bandwidth-limited performance, data congestion, and processing unit starvation. These mechanisms also reduce dependency on the external memory of the SoC in which the NeuPro-M NPU IP is embedded.

The NeuPro-M AI processor builds upon Ceva’s industry-leading position and experience in deep neural network applications. Dozens of customers are already deploying Ceva’s computer vision & AI platforms along with the full CDNN (Ceva Deep Neural Network) toolchain in consumer, surveillance and ADAS products.

NeuPro-M was designed to meet the most stringent safety and quality compliance standards, such as the automotive ISO 26262 ASIL-B functional safety standard and the A-SPICE quality assurance standard. It comes complete with a comprehensive AI software stack, including:

- NeuPro-M system architecture planner tool: allows fast and accurate neural network development on NeuPro-M and helps ensure final product performance

- Neural network training optimizer tool: allows a further performance boost and bandwidth reduction while still in the neural network domain, to fully utilize every NeuPro-M optimized coprocessor

- CDNN AI compiler and runtime: composes the most efficient flow scheme within the processor to ensure maximum utilization at minimum bandwidth per use case

- Compatibility with common open-source frameworks, including TVM and ONNX

The NeuPro-M NPU architecture supports secure access in the form of an optional root of trust, authentication against IP / identity theft, secure boot, and end-to-end data privacy.


Block Diagram of the NPU IP family for generative and classic AI with highest power efficiency, scalable and future proof