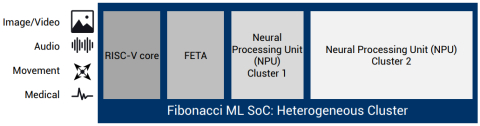

Ultra-low-power AI/ML processor and accelerator

NPU Clusters:

• Optimized for spatial neural networks (e.g., CNNs such as ResNets and MobileNets)

• Sparsity exploitation

• Peak MAC performance: 160 GOPS
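The listing does not say how the NPU clusters exploit sparsity. As a rough illustration only, one common approach is zero-skipping: any multiply-accumulate (MAC) whose weight or activation is zero is skipped, since it contributes nothing to the result. The sketch below is a generic software model of that idea, not the vendor's mechanism. The function name `sparse_dot` and the sample data are hypothetical.

```python
def sparse_dot(weights, activations):
    """Dot product that skips any pair containing a zero operand.

    Returns (result, macs_performed) so the saved work is visible.
    Illustrative model only; real hardware would do this in parallel.
    """
    acc = 0
    macs = 0
    for w, a in zip(weights, activations):
        if w == 0 or a == 0:
            continue  # a zero product adds nothing, so skip the MAC
        acc += w * a
        macs += 1
    return acc, macs


# Hypothetical sample data: mostly-zero weights, some zero activations.
weights = [0, 3, 0, -2, 0, 0, 1, 0]
activations = [5, 0, 7, 4, 0, 2, 6, 1]

result, macs = sparse_dot(weights, activations)
print(f"result={result}, MACs performed={macs} of {len(weights)}")
```

In this toy case only 2 of the 8 MACs are performed. Fewer operations generally mean less switching activity, which is one reason sparsity matters for ultra-low-power designs.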

FETA Cluster:

• Optimized for temporal neural networks (e.g., RNNs such as LSTM or GRU)

• Smart temporal feature extraction engine


Block Diagram of the Ultra-low-power AI/ML processor and accelerator

Video Demo of the Ultra-low-power AI/ML processor and accelerator

Emotion detection running from a coin cell battery
