Ultra Low Power Edge AI Processor

In an AI / Deep Learning system made up of a large-scale cloud system and an enormous number of IoT devices on the edge side, distributed processing is expected to become widespread. Learning (training) processing will be done on the cloud side because it requires a tremendous amount of data. Inference processing, by contrast, is expected to be carried out solely on the edge side to meet the mandatory requirements of real-time response, safety, privacy and security.

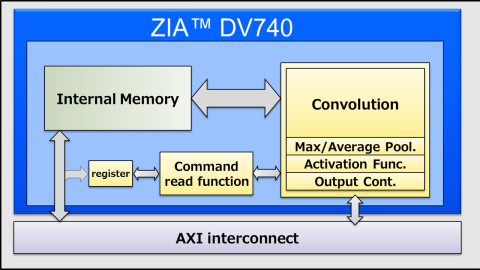

For inference processing on the edge side, low power, high performance and low cost are must-have features for a competitive AI processor. ZIA™ DV740 is a configurable processor IP that uses DMP’s advanced processor and hardware optimization technologies. ZIA™ DV740 allows both performance and hardware size to be optimized for dedicated inference processing. Because ZIA™ DV740 enables inference processing that is optimal for each edge application, including low power consumption and small size, DMP will strongly promote your use of AI / Deep Learning.


Block Diagram of the Ultra Low Power Edge AI Processor
