Alberto Duenas, NGCodec Inc.

INTRODUCTION

The new High Efficiency Video Coding (HEVC) video compression standard results from the latest joint project of the ISO/IEC Moving Picture Experts Group (MPEG) and the ITU-T Video Coding Experts Group (VCEG) standardization groups, performed under the name of the Joint Collaborative Team on Video Coding (JCT-VC) (ITU-T and ISO/IEC (1)). This follows similar work done by the previous cooperation under the Joint Video Team (JVT), which delivered the common specification ITU-T H.264/MPEG-4 AVC (ITU-T and ISO/IEC (2)). The first version of HEVC was completed in January 2013 and was published by both ITU-T and ISO/IEC.

To date, H.264/AVC has been an extremely successful standard, used very effectively in most recent applications, from broadcasting and internet video to optical discs, mobile devices, camcorders and video communication.

Nevertheless, some new applications and the proliferation of existing ones required the creation of a new video compression format, one that addresses the need for greater compression efficiency, larger resolutions and more optimised implementation using current technologies.

Following previous MPEG and ITU-T standards, HEVC specifies only the syntax and the decoding process. This guarantees interoperability between different implementations while allowing great flexibility in implementation. This flexibility allows encoding products to improve in performance and competitiveness over time, as better underlying technology becomes available and algorithms become more mature and better understood.

DESCRIPTION OF TIMELINE AND DEVELOPMENT PHASES

Work on new video compression technology started soon after the completion of the H.264/AVC project, with different activities initiated by MPEG and VCEG. VCEG actively extended the H.264/AVC joint reference software model (JM) with new techniques and ideas, producing what was defined as the key technology areas software model (KTA). In parallel, MPEG organized several workshops on new ideas for video compression and in 2009 issued a "call for evidence" (ISO/IEC (3)). The response showed that there were enough new techniques and ideas to justify the launch of a new standardization effort with the goal of obtaining substantial compression efficiency gains. In January 2010 the JCT-VC was created by MPEG and VCEG and a joint call for proposals on video compression technologies (CfP) (ITU-T and ISO/IEC (4)) was issued.

The first JCT-VC meeting was conducted in Dresden days after the eruption of the Eyjafjallajökull volcano in Iceland, which did not stop the intensive work to evaluate the 27 responses to the CfP. Many of the proposals showed very significant gains compared with the H.264/AVC reference and, based on the work of several of the best performing systems, a "test model under consideration" (TMuC) (ITU-T and ISO/IEC (5)) was proposed. An associated software model representing the techniques available in the TMuC was created after the meeting. During the second meeting it was identified that the TMuC included redundant techniques, and a process to test each of the elements within it was initiated; this was mostly conducted during Tool Experiment 12 (McCann (6)). After the results of this work were evaluated during the third meeting in October 2010, a first version of the working draft of the HEVC specification was produced (Wiegand et al (7)). This contained only the techniques that proved to offer substantial advantages.

During the following nine meetings more techniques were evaluated, in addition to a large number of simplifications and improvements that were included in the standard once their validity had been established. Finally, after twelve meetings, in January 2013, the work on version 1 of the specification was concluded with the 10th version of the working draft of the HEVC specification (Bross et al (8)). This was submitted for approval to the parent standardization bodies.

It is important to note the scale of this project compared with previous collaborative projects (like MPEG-2/H.262 and H.264/AVC) and potentially with other video compression activities (like VC-1, VP8 and VP9), in addition to the extensive work done to create the responses to the CfP. More than 200 experts physically participated at each meeting (Sullivan and Ohm (9)), where up to 1000 openly-distributed documents were reviewed and acted upon in a collaborative manner. This is a much larger scale than in the previous collaborative project between VCEG and MPEG that delivered H.264/AVC. It was a massive endeavour that required very complex coordination, leadership and supporting technology, and it delivered extremely satisfactory results on the specified timeline.

It may be concluded that, as video compression technology becomes more complex, it becomes harder to achieve positive results. In future, projects will most likely require far greater resources in order to achieve comparable results. If these are made available, a new video compression standard could be delivered in a similar timeframe to that in which MPEG-2/H.262, H.264/AVC and HEVC were created.

ALGORITHM DETAILS

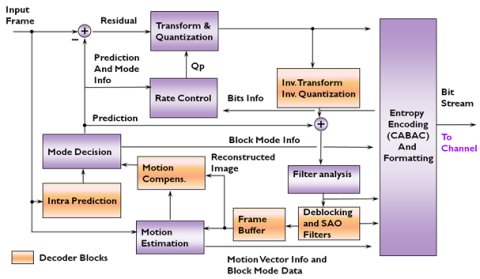

The foundation of the design used in HEVC is the same as in previous standards such as MPEG-2/H.262 and H.264/AVC. This shows that the established and well-understood concept of a block-based hybrid motion-compensated predictive structure is a good foundation for further improvements, and that it is very difficult to deliver this level of compression efficiency with radically different approaches. The main structure of an HEVC encoder can be seen in Figure 1.

Similarities with H.264/AVC

There are extensive similarities between the HEVC and H.264/AVC designs, and HEVC could be considered an extension of the previously available compression principles. It shares the following with H.264/AVC: the high-level coding structure, most of the principles of the high-level syntax, integer transforms, similar intrapicture and interpicture prediction methods, the quantizer (including scaling matrices), the deblocking filtering process, quarter-pel interpolation, motion-compensation principles, weighted prediction, hierarchical picture structures and the entropy coder.

Figure 1 – HEVC Encoder Architecture

Additional tools and modifications introduced in HEVC

Although HEVC shares many similarities and tools with previous video compression standards, it also adds to them or extends them in multiple ways. This section describes the main differences compared with the H.264/AVC specification.

Picture partitioning, block coding and transform

The first fundamental difference is that, instead of using 16x16 macroblocks like previous standards, HEVC uses a tree structure and quadtree-like signalling. The picture is partitioned into coding tree units (CTUs), where each CTU consists of a luma CTB, the corresponding chroma CTBs and the associated syntax elements. The size of a luma CTB can be chosen as 64x64, 32x32 or 16x16 samples. The encoder can decide on the size to be used to trade off compression efficiency against other factors.
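
As a rough illustration of what the choice of CTB size means for an implementation, the following sketch counts the CTUs needed to cover a picture. The function name `ctu_grid` is hypothetical, and the sketch assumes that a picture whose dimensions are not a multiple of the CTB size is covered by rounding the CTU count up in each direction.

```python
import math


def ctu_grid(width, height, ctb_size):
    """Return (columns, rows, total) of CTUs covering a width x height luma picture."""
    if ctb_size not in (16, 32, 64):
        raise ValueError("luma CTB size must be 16, 32 or 64 samples")
    # Assumption: partial CTUs at the right and bottom edges still count as one CTU.
    cols = math.ceil(width / ctb_size)
    rows = math.ceil(height / ctb_size)
    return cols, rows, cols * rows


for size in (64, 32, 16):
    print(size, ctu_grid(3840, 2160, size))
```

For a 3840x2160 picture the count grows from 2040 CTUs at 64x64 to 32400 at 16x16, which gives a feel for how the per-CTU overhead of a design scales with this parameter.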

Figure 2 – Picture partitioning

The CTUs may be split into coding units (CUs). Each CU consists of one luma CB and two chroma CBs, together with the associated syntax. A CTB may contain only one CB or may be split recursively into multiple CBs, down to a minimum CB size of 8×8 luma samples. A CU can then be partitioned once into prediction units (PUs) and transform units (TUs). It is at the CU level that the encoder decides the type of prediction to be used: intrapicture or interpicture. PB sizes from 64×64 to 4×4 samples are supported. In addition, for interpicture prediction, each PU contains one or two motion vectors for uni-predictive or bi-predictive coding respectively.
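
The recursive splitting of a CTB into CBs can be sketched as below. This is a minimal illustration and not the method of any particular encoder: `split_ctb` and `toy_cost` are hypothetical names, the cost function stands in for whatever estimate a real encoder uses, and the search is exhaustive, which a real-time design would normally prune.

```python
def split_ctb(x, y, size, cost, min_size=8):
    """Recursively partition a square block into coding blocks.

    cost(x, y, size) is a hypothetical estimate of the cost of coding the
    block without splitting it. Returns (total_cost, [(x, y, size), ...]).
    """
    unsplit_cost = cost(x, y, size)
    if size <= min_size:
        return unsplit_cost, [(x, y, size)]
    half = size // 2
    split_cost, blocks = 0.0, []
    # Visit the four quadrants in raster order within the parent block.
    for dy in (0, half):
        for dx in (0, half):
            c, b = split_ctb(x + dx, y + dy, half, cost, min_size)
            split_cost += c
            blocks.extend(b)
    if split_cost < unsplit_cost:
        return split_cost, blocks
    return unsplit_cost, [(x, y, size)]


def toy_cost(x, y, size):
    """Toy cost: a block covering the 'detailed' sample at (5, 5) is expensive."""
    busy = x <= 5 < x + size and y <= 5 < y + size
    return size * size if busy else 16


total, blocks = split_ctb(0, 0, 64, toy_cost)
print(total, blocks)
```

With this toy cost the 64x64 block is split down to 8x8 only around the detailed sample, while the flat areas stay as large blocks.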

After the prediction is performed, a square transform is used to code the residual. It is possible to use a transform of the same size as the CB or to split it into 4 smaller TBs. HEVC allows the use of DCT-like integer transforms of sizes from 4x4 to 32x32. In addition, for 4x4 intra-predicted luma blocks, it is possible to use an alternative DST-like integer transform. There are some constraints to take into account: inter-predicted CUs can be split into two or four smaller PUs, but intra-predicted CUs are not allowed to be split into two smaller PUs. In addition, for inter-predicted CUs, the 4x4 PU size is not allowed, and 8x4 and 4x8 PUs are limited to uniprediction in order to reduce memory bandwidth. This flexible partitioning is the main contributor to the compression efficiency gains that HEVC encoders can deliver.

Intra prediction

Compared with the 8 directional intrapicture prediction modes in H.264/AVC, HEVC supports 33 directional modes plus planar and DC prediction modes.

Intra-predicted PU sizes range from 4x4 to 32x32.

For both chroma CBs, a single intra prediction mode is selected. It specifies either the same prediction mode that was used for luma or a horizontal, vertical, planar, left-downward diagonal or DC prediction mode. The intra prediction mode is applied separately for each transform block (TB).

Figure 3 – Example of possible PU splittings for skip, intra and inter prediction

Inter prediction

HEVC enhances the interpicture prediction method by deriving several most probable candidates from the data of adjacent PBs and the reference pictures.

There is a new merge mode for MV coding, similar to the direct mode in H.264/AVC, which does not need to transmit the motion vector information. Instead, the motion vectors are derived in the decoder from a candidate list of motion parameters.

In addition, there is an advanced motion vector prediction (AMVP) method, which includes the derivation of several most probable candidates based on data from adjacent PBs and the reference picture.

Entropy coding (CABAC)

Similar to H.264/AVC, Context-Adaptive Binary Arithmetic Coding (CABAC) is used for entropy coding but coefficient coding has been improved, leading to greater efficiency for larger transform sizes and a much higher throughput than before.

In-loop filtering, deblocking and sample adaptive offset

HEVC includes a deblocking filter similar to that used in H.264/AVC but simpler and better suited to parallel processing. In addition, HEVC adds a new sample-adaptive offset (SAO) filtering technique, executed after the deblocking process and within the interpicture prediction loop. SAO processes the entire picture as a hierarchical quadtree; it classifies the reconstructed samples into different classes and reduces the distortion by adding a separate offset for each class. These techniques deliver very substantial subjective gains.
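
The idea of classifying samples and adding a per-class offset can be sketched as follows. This is a simplified illustration of a band-offset style classification, assuming the sample range is divided into 32 equal intensity bands and that offsets are supplied for a few consecutive bands; the function and parameter names are hypothetical, and the edge-based classification is not shown.

```python
def sao_band_offset(samples, band_position, offsets, bit_depth=8):
    """Add a per-band offset to reconstructed samples.

    Samples are classified by intensity into 32 equal-width bands (assumed).
    offsets[k] applies to band `band_position + k`; other bands are untouched.
    """
    shift = bit_depth - 5            # 2**5 = 32 bands over the sample range
    max_val = (1 << bit_depth) - 1
    out = []
    for s in samples:
        k = (s >> shift) - band_position
        if 0 <= k < len(offsets):
            # Clip so the corrected sample stays inside the valid range.
            s = min(max(s + offsets[k], 0), max_val)
        out.append(s)
    return out


print(sao_band_offset([10, 66, 70, 80, 255], band_position=8, offsets=[2, -1, 3, 0]))
# prints [10, 68, 72, 83, 255]
```

Only the samples that fall in the signalled bands are modified, so the encoder can target the intensity range where the reconstruction error is concentrated.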

Tiles and wavefront parallel processing

HEVC introduces two new techniques to enable the use of parallel processing architectures for encoding and decoding, in addition to the slices available in previous standards. Using slices leads to a significant penalty in compression efficiency, because no prediction is performed across slice boundaries and because of the size of the slice headers (now comparatively larger). Tiles are rectangular regions of the picture that are independently decodable; they deliver greater compression efficiency than slices as they do not have a slice header. Alternatively, with wavefront parallel processing (WPP), a picture or slice is divided into rows of CTUs, and the encoding or decoding of each row can start once the data needed for prediction (and for the adaptation inside CABAC) become available from the previous row.

There are some restrictions: tiles and WPP cannot be combined, and there is a minimum tile size. However, a slice can contain multiple tiles and a tile can contain multiple slices.
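
The row-to-row dependency in WPP can be illustrated with a small scheduling sketch. It assumes that every CTU takes one time slot, that one processing unit is available per CTU row, and that a CTU can start once its left neighbour and the CTU above and to the right have finished (a lag of two CTUs between rows). The function name `wpp_schedule` and the `lag` parameter are hypothetical.

```python
def wpp_schedule(rows, cols, lag=2):
    """Earliest start slot of each CTU under a wavefront dependency pattern."""
    start = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            ready = 0
            if c > 0:
                # Wait for the left neighbour in the same row.
                ready = start[r][c - 1] + 1
            if r > 0:
                # Wait for the CTU `lag - 1` columns ahead in the row above.
                above = min(c + lag - 1, cols - 1)
                ready = max(ready, start[r - 1][above] + 1)
            start[r][c] = ready
    return start


schedule = wpp_schedule(rows=34, cols=60)
total_slots = schedule[-1][-1] + 1
print(total_slots, 34 * 60)
```

Under these assumptions a 60x34 CTU picture completes in 126 slots instead of 2040 sequential slots, with each row starting two slots after the row above it.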

Figure 4 – Slices, tiles and WPP

Transform skipping

The transform skip mode allows the transform to be skipped in one or both spatial dimensions for blocks of size 4x4. It generally improves the compression efficiency for special types of video sequences, especially computer-generated graphics.

Scanning methods

Unlike previous specifications, HEVC does not use the traditional zigzag scanning, because it does not deliver optimum results whenever the scan moves from one diagonal to another. In addition to the diagonal scan, horizontal and vertical scans may also be applied in the intra case for 4×4 and 8×8 TBs, as seen in Figure 5.
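
The three scan patterns can be generated for a single block as in the sketch below. It assumes that the diagonal scan walks each anti-diagonal from bottom-left to top-right, always in the same direction, which is what distinguishes it from a zigzag. The function names are hypothetical, and the sketch shows only the pattern inside one block, not how larger TBs are divided into sub-blocks or the order in which coefficients are actually coded.

```python
def diagonal_scan(n):
    """Up-right diagonal scan of an n x n block as a list of (x, y) positions."""
    order = []
    for d in range(2 * n - 1):
        # Every anti-diagonal is walked in the same direction (bottom-left to
        # top-right), unlike a zigzag scan, which alternates direction.
        for y in range(min(d, n - 1), -1, -1):
            x = d - y
            if x < n:
                order.append((x, y))
    return order


def horizontal_scan(n):
    """Row-by-row scan, left to right."""
    return [(x, y) for y in range(n) for x in range(n)]


def vertical_scan(n):
    """Column-by-column scan, top to bottom."""
    return [(x, y) for x in range(n) for y in range(n)]


print(diagonal_scan(4))
```

Each function returns every position of the block exactly once, so any of them can be used to map a two-dimensional block of coefficients to a one-dimensional list.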

Figure 5 – Scan patterns

Simplification of the interlaced tools

HEVC supports the coding of interlaced video sequences but compared with H.264/AVC this has been greatly simplified and achieved with reduced complexity. HEVC does support picture-adaptive field and frame coding by transmitting 5 flags in the optional SEI messages sent in each picture.

Additional simplifications

HEVC has also simplified a number of areas of the design, leading to complexity reductions that help encoder implementations. Among these are the following: reduction of the error resilience tools (e.g. no FMO), fewer picture order count types, reduced memory bandwidth (as there are limitations on the interpicture prediction for the smaller blocks), simpler and higher-throughput CABAC, simpler deblocking design, removal of the implicit weighted prediction mode and removal of a second entropy engine.

HARDWARE IMPLEMENTATION CHALLENGES

In this section we cover some of the challenges associated with the implementation of a UHDTV-capable HEVC encoder supporting the Main 10 Profile. A hardware implementation can have much more flexibility than a software-based implementation. This is because VLSI technology has improved very substantially and can deliver the technology required to extract most of the benefits of the HEVC specification, leading to better visual quality, lower power consumption and lower cost. Hardware does, however, need a longer development time and results in higher R&D cost.

Traditional encoder implementation challenges

Some of the challenges are present with any video compression encoder:

Motion estimation

This is traditionally one of the main areas of work for encoder implementations, as its design greatly influences the complexity and compression efficiency of the final encoder product. This challenge is aggravated in the case of HEVC by the combination of larger search areas and the requirement to perform complex searches. Traditionally this is an area where the complexity of implementations grows over successive product generations and allows for great differentiation, with larger search areas being covered with greater precision and accuracy.

Mode decision

A real-time encoder needs to be able to select the best possible coding mode for a given block of the picture. In many cases this needs to be done by estimating multiple options and selecting a priori the mode that is expected to deliver the lowest cost. The goal of the mode decision is to select, from all the possible modes, the one that delivers the lowest distortion at the lowest rate. This is quite complex in HEVC, as the encoder needs to decide between inter and intra modes for a given CU, the intra prediction mode, the type of split for inter prediction, the transform size and the partitioning for the CU, and there are many options available for each. In addition, the encoder needs to comply with the bit-rate constraints, which introduces a further level of complexity.
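
One common way to combine distortion and rate into a single cost is a Lagrangian cost J = D + λR, where D is the distortion, R the number of bits and λ a weighting factor. The sketch below assumes this formulation, which is not stated in the text above; the mode names and figures are invented for illustration only.

```python
def choose_mode(candidates, lam):
    """Return the candidate (name, distortion, rate_bits) with the lowest J = D + lam * R."""
    return min(candidates, key=lambda m: m[1] + lam * m[2])


# Hypothetical estimates for one CU: (mode name, distortion, rate in bits).
candidates = [
    ("intra_dc", 900.0, 40),
    ("inter_2Nx2N", 400.0, 95),
    ("merge_skip", 1200.0, 2),
]

print(choose_mode(candidates, lam=4.0)[0])    # prints inter_2Nx2N
print(choose_mode(candidates, lam=20.0)[0])   # prints merge_skip
```

Raising λ makes bits more expensive relative to distortion, so the same candidates lead to a cheaper mode; this is one way a rate controller can steer the mode decision towards a bit-rate target.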

Reduction of memory bandwidth

Memory bandwidth can be very substantial with large picture resolutions, so it is an important area to consider. Techniques similar to those used in H.264/AVC can also be used in HEVC and will deliver similar results; these include caching of the data, compression of reference pictures and data flow techniques.

Low delay

The importance of low delay (less than one frame) has grown in recent years, as a number of important applications require it. This has led to a new set of implementation constraints that introduce new demands on the encoder. HEVC offers the possibility of using sub-frame buffering models to help with this problem (Kazui and Duenas (10)), but this is an area where encoders can deliver very different performance.

HEVC specific encoder implementation challenges

The development of hardware HEVC encoders encounters a new set of challenges because of the introduction of new techniques and the new trade-offs needed to deal with them:

High-level parallelism techniques

HEVC allows 3 types of high-level parallelism techniques to be used (slices, tiles and WPP), giving several alternatives to select from. Different applications and implementations will select differently from these options, resulting in different trade-offs in terms of level of parallelism, size of the design, clock frequency used to implement the design, memory utilization/bandwidth and compression efficiency. WPP is preferable in cases where compression efficiency is most important; in contrast, tiles have important memory advantages for multichip approaches and are a natural extension of the traditionally used slices.

Quadtree-based block partitioning

As one of the main goals for HEVC was to be able to process UHDTV pictures efficiently, large 64x64 prediction units can be used, but within the algorithm these can be partitioned down to a minimum size of 4x4, which delivers very important compression efficiency gains. This implies the use of a quadtree-based approach, which is a large challenge in the implementation of an HEVC encoder. The encoder needs to select efficiently between a very extensive number of options while trying to minimize the complexity. This leads to a situation where different HEVC encoders may trade off compression efficiency against complexity by limiting the size of the CTB or reducing the number of CUs being evaluated.

Interpicture prediction complexity

The complexity of the interpicture prediction has increased with the larger blocks and the block partitioning. The complexity of the quarter-pel interpolation filter has also increased, as it contains 8 taps for luma. The motion vector prediction method has also become more complex, using more candidates and including a new merge mode that extends the direct and skip modes available in H.264/AVC.
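
To give a feel for the cost of an 8-tap filter, the sketch below interpolates fractional positions along one row of luma samples. The coefficients are the ones commonly cited for the HEVC luma half-sample and quarter-sample positions, but they are not given in this paper and should be checked against the specification. The sketch is a single-stage, one-dimensional simplification with edge padding; the function name is hypothetical and the intermediate precision of a real two-dimensional implementation is not modelled.

```python
# Assumed 8-tap luma coefficients; each set sums to 64.
HALF_PEL_TAPS = (-1, 4, -11, 40, 40, -11, 4, -1)
QUARTER_PEL_TAPS = (-1, 4, -10, 58, 17, -5, 1, 0)


def interpolate_row(samples, taps, bit_depth=8):
    """Fractional-position samples between samples[i] and samples[i + 1]."""
    max_val = (1 << bit_depth) - 1
    n = len(samples)
    out = []
    for i in range(n - 1):
        acc = 0
        for k, tap in enumerate(taps):
            # Taps cover integer positions i-3 .. i+4; clamp at the row edges.
            j = min(max(i - 3 + k, 0), n - 1)
            acc += tap * samples[j]
        # Round, normalise by 64 and clip to the valid sample range.
        out.append(min(max((acc + 32) >> 6, 0), max_val))
    return out


ramp = list(range(0, 100, 10))
print(interpolate_row(ramp, HALF_PEL_TAPS)[4], interpolate_row(ramp, QUARTER_PEL_TAPS)[4])
```

Each output sample needs eight multiply-accumulate operations and eight input samples, so a block of width W needs W + 7 reference samples per row, which is part of why the memory bandwidth of small blocks is a concern.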

Intrapicture Prediction complexity

Conceptually, HEVC has a prediction scheme similar to that of H.264/AVC, but a much larger number of directions is now involved and larger blocks can be used as an option. It is therefore necessary to consider the implementation complexity of this type of prediction more seriously than has been required in the past.

Support for 10-bit bit-depth

The Main 10 Profile supports a bit depth of 10 bits. This is more important for UHDTV and 1080p than it has been for lower-resolution images, as these services aim to improve the color representation. The support for 10 bits has important implications, as the internal processing, internal memory and external memory bandwidth all need to accommodate the greater precision.
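
The effect on memory can be estimated with simple arithmetic. The sketch below assumes 4:2:0 chroma sampling and compares two hypothetical storage layouts for 10-bit samples: one sample per 16-bit word, or samples packed tightly. Neither layout is prescribed by the text above.

```python
def frame_bytes(width, height, bit_depth, packed=False):
    """Bytes needed to store one 4:2:0 picture."""
    # 4:2:0: one luma sample per pixel plus two chroma planes at quarter size.
    samples = width * height * 3 // 2
    if packed:
        # Samples stored back to back, rounded up to a whole byte.
        return (samples * bit_depth + 7) // 8
    # Otherwise one byte per sample up to 8 bits, two bytes above that.
    bytes_per_sample = 1 if bit_depth <= 8 else 2
    return samples * bytes_per_sample


for label, args in (("8-bit", (8, False)), ("10-bit in 16-bit words", (10, False)),
                    ("10-bit packed", (10, True))):
    print(label, frame_bytes(3840, 2160, *args))
```

For a 3840x2160 picture this gives about 12.4 MB at 8 bits, 24.9 MB when 10-bit samples are stored in 16-bit words and 15.6 MB when they are packed, so the choice of layout alone changes the reference picture traffic by a large factor.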

Transforms and quantization

The larger transform sizes, up to 32x32, are much greater than the 8x8 of H.264/AVC, but this does not add significant complexity for most hardware implementations.

Entropy coding (CABAC)

During the development of HEVC it was decided to use only one entropy coding method, and CABAC was selected as it offered significant compression gains compared with CAVLC. This led to the development of a high-throughput CABAC entropy coding scheme that could deliver the bit-rates required by some applications (Sole et al (11)). The new engine substantially reduces the number of bins that need to be regularly coded. As the rest of the bins are coded in bypass mode, this leads to a much simpler design, allows support for higher bit-rates or lower clock frequencies and reduces the overall complexity of the entropy encoder.

SAO parameters

The encoder needs to decide which SAO parameters to use. This leads to an approach similar to that employed for mode decision, selecting between all the available options; done well, it can very substantially reduce some of the compression artefacts.

Visual quality challenges

A number of new techniques require new visual optimizations in order to produce a broadcast-quality encoder. Among these is the support for larger blocks, which might produce banding and blocking artefacts in the decoded pictures. The new sample-adaptive offset and deblocking filtering mechanisms generally address some of the visual artefacts, but they can produce new ones. Also, the simplified support for interlaced pictures can produce artefacts and a loss in compression efficiency if not applied correctly.

COMPRESSION GAINS COMPARED WITH H.264/AVC

As previously noted one of the main goals of the HEVC standardisation effort was to achieve substantial compression efficiency gains compared with previous technologies. It is important to note that most of the quality tests being performed are done using complex software models that do not have any real-time constraints. These are representative of the long-term capabilities of the HEVC standard and its potential in future implementations.

A number of tests have been conducted comparing the HEVC Main profile reference software (HM) with the equivalent H.264/AVC High profile reference software (JM). These tests show the potential gains to be obtained by products based on HEVC compared with H.264/AVC. Using the test sequences used during the standardisation process, which include resolutions from 240p to 2160p, it was noted (Ohm et al (12)) that the HEVC reference software showed bit-rate savings at equal PSNR of 40.3% and 35.4% compared with the H.264/AVC reference software for interactive and entertainment applications respectively. In a more recent test (Li et al (13)) using HM-10.0, it was reported that HEVC can gain about 34.3% for the entertainment configuration and about 36.8% for the interactive configuration when compared with H.264/AVC. It is important to note that the compression efficiency gains depend on the type of picture being used, with lower gains for intra-only pictures, for lower resolutions and for higher bit-rates. In a recent test with UHDTV material (Andrivon et al (14)) it was noted that, at this resolution, HEVC can save about 25% in bit-rate for the I-frame-only configuration and about 45% for the entertainment and interactive configurations when compared with H.264/AVC.

It is important to note that these tests were conducted using rate-distortion objective measures. It has been noted many times that HEVC actually performs better in terms of subjective visual quality than the objective measures suggest. As an example (Ohm et al (12)), based on an older version of the HEVC reference software (HM 5.0), it was identified that for entertainment applications HEVC offers around a 50% bit-rate saving compared with H.264/AVC HP for the same perceptual quality over a wide range of video sequences.

FUTURE TRENDS

Short-term evolution of HEVC standardization process

The JCT-VC currently continues its work on higher bit-depth, improved color sampling and scalable extensions, as well as better support for screen content and visually lossless techniques. Some of this work is expected to be ratified in standards during 2014.

Evolution after the HEVC standardization

The process of delivering a new video compression design will happen in a gradual manner. It will take a substantial amount of time to achieve significant gains again, but it is the general belief that this will be possible in the future. The currently used methodology is well-established and has delivered positive results for more than 20 years. There are currently a number of techniques that need further work and could be investigated in detail. Among these are: even larger blocks and transforms, more complex intrapicture prediction methods (including short distance intra prediction), more complex interpicture prediction methods (considering more motion vector candidates), additional filtering processes, prediction of luma and chroma data from each other, dictionary-based coding, alternative transforms and alternative entropy coding methods.

REFERENCES

1. ITU-T and ISO/IEC, 2010. Terms of Reference of the Joint Collaborative Team on Video Coding Standard Development, document ITU-T VCEG-AM90 and MPEG N11112.

2. ITU-T and ISO/IEC, 2003. Advanced Video Coding for Generic Audio-Visual Services. International standard ISO/IEC 14496-10 (AVC) and ITU-T Recommendation H.264.

3. ISO/IEC, 2009. Call for Evidence on High-Performance Video Coding (HVC). MPEG document N10553.

4. ITU-T and ISO/IEC, 2010. Joint Call for Proposals on Video Compression Technology. MPEG document N10553.

5. ITU-T and ISO/IEC, 2010. Test Model under Consideration. JCT-VC Document JCTVC-A205.

6. McCann, K., 2010. TE12: Summary of evaluation of TMuC tools in TE12. JCT-VC document JCTVC-C225.

7. Wiegand, T., Han, W.-J., Bross, B., Ohm, J.-R., & Sullivan, G. J., 2010. WD1: Working Draft 1 of High-Efficiency Video Coding. JCT-VC document JCTVC-C403.

8. Bross, B., Han, W.-J., Ohm, J.-R., Sullivan, G. J., Wang, Y.-K., & Wiegand, T., 2013. High Efficiency Video Coding (HEVC) text specification. JCT-VC document JCTVC-L1003.

9. Sullivan, G., & Ohm, J.-R., 2013. Meeting report of the 13th meeting of the Joint Collaborative Team on Video Coding (JCT-VC). JCT-VC document JCTVC-M_Notes_dA.

10. Kazui, K., & Duenas, A., 2012. Market needs and practicality of sub-picture based CPB operation. JCT-VC document JCTVC-H215.

11. Sole, J., Joshi, R., Nguyen, N., Ji, T., Karczewicz, M., Clare, G., Henry, F., & Duenas, A., 2012. Transform Coefficient Coding in HEVC. IEEE Transactions on Circuits and Systems for Video Technology, volume 22, number 12, pp. 1765-1777.

12. Ohm, J.-R., Sullivan, G. J., Schwarz, H., Tan, T. K., & Wiegand, T., 2012. Comparison of the Coding Efficiency of Video. IEEE Transactions on Circuits and Systems for Video Technology, volume 22, number 12, pp. 1669-1684.

13. Li, B., Sullivan, G. J., & Xu, J., 2013. Comparison of Compression Performance of HEVC Draft 10 with AVC High Profile. JCT-VC document JCTVC-M0329.

14. Andrivon, P., Salmon, P., & Bordes, P., 2013. Comparison of compression performance of HEVC Draft 10 with AVC for UHD-1 material. JCT-VC document JCTVC-M0166.

In addition, the JCT-VC documents are publicly available at http://phenix.int-evry.fr/jct/.