By Mixel

The history of data processing begins in the 1960s with centralized on-site mainframes, which later evolved into distributed client-server systems. At the beginning of this century, centralized cloud computing became attractive and gained momentum, becoming one of the most popular computing tools today. In recent years, however, demand has grown once again for processing at the edge, closer to the source of the data. We have gone “Back to the Future!”

Figure 1: History of Centralized and Cloud Computing

One of the original benefits of processing in the cloud was simply to expand beyond the limited capacity of on-site processing. With advancements in AI, more and more decisions can be made at the edge. It is now clear that edge and cloud processing are complementary technologies; both are essential to achieve optimal system performance. Designers of connected systems must ask: what is the most efficient system partitioning between the cloud and the edge?

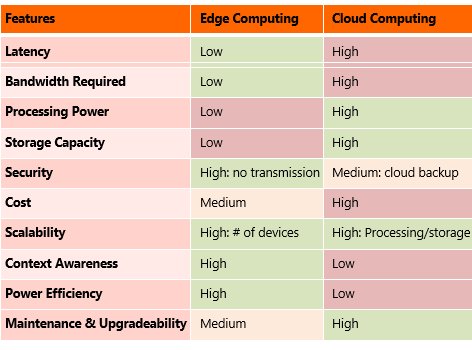

Benefits of Cloud vs. Edge computing

To understand the best way to partition a system, we must first understand the benefits of each. For edge computing, the biggest benefit is low latency. Edge really shines when decisions must be made in real time or near real time. This ability to make decisions in real time provides other ancillary benefits. With AI, devices can improve power efficiency by reducing false notifications. Processing at the edge also reduces the chance of a security breach, because raw data does not have to be transmitted to be processed somewhere else. Where connection costs are high or connectivity is limited, processing at the edge may be the only feasible or practical option.

When it comes to the benefits of cloud computing, it really comes down to performance. Cloud processing offers massive computing capability that can’t be replicated at the edge. This is essential for complex machine learning and modeling. The cloud also offers large storage capacity and the ability to scale both storage and computing resources at an incremental cost. The cloud can provide high security once the data is in the data center. Also, cloud servers are generally easier to maintain because they are centralized.

Table 1: Edge vs. Cloud Computing

Examples of System Designs

Now that we have examined the tradeoffs, we can look at some examples of system designs where both cloud and edge processing can be used.

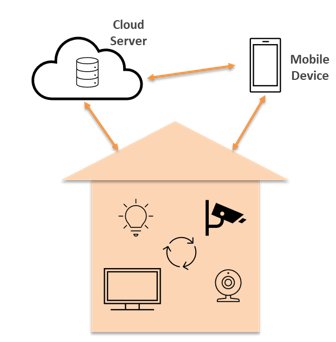

A smart home security system is a notable example, as there is natural segmentation for data processing. Some tasks are best done at the edge: facial recognition, voice recognition, motion detection that ignores false alerts, and detection of relevant audio inputs such as user commands, glass breaking, or alarms. The cloud can still be used for long-term retention and machine learning.

Figure 2: Smart home system

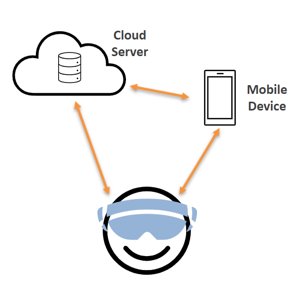

Another example is wearable smart devices. Wearables would use edge processing to monitor the environment and identify relevant objects, people, and sounds through small built-in sensors. The wearable would also connect to the cloud and the internet via a mobile device, giving it access to a huge database that can include contacts, images, global maps, and encyclopedias.

Figure 3: Wearables system

Both examples above benefit from combining cloud and edge computing. The cloud is used for anonymized data aggregation, optimization, and long-term storage. With better local decision making by the device, you can improve battery life, reduce bandwidth requirements, and improve security. Both system examples, like many IoT devices, would need to collect and integrate data across multiple devices such as sensors, cameras, displays, and microphones. To process all this data in real time at the edge, you need a processor that can handle multiple sensory inputs and support AI and machine learning at exceptionally low power. Perceive® has done just that with its Ergo® AI processor, which is designed for IoT and edge devices.

Perceive® Ergo® AI Processor

The Perceive Ergo chip is an inference processor designed specifically to meet the needs of power-constrained IoT and edge devices. It can deliver 4 sustained GPU-equivalent floating-point TOPS at 55 TOPS/W. With this level of performance and efficiency, the Ergo processor can process large neural networks using as little as 20 mW and supports a variety of advanced neural networks, all with local processing. The Perceive Ergo chip enables machine learning applications such as video object detection, audio event detection, speech recognition, video segmentation, pose analysis, and other features that create better user experiences. Applications that Perceive customers target with the Ergo processor include the smart home security systems and wearable devices mentioned earlier, as well as video conferencing and portable computing.
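As a rough sanity check, the throughput, efficiency, and power figures quoted above can be related with simple arithmetic. The sketch below assumes the 55 TOPS/W efficiency holds constant across operating points, which the text does not state; the function names are illustrative only.

```python
# Back-of-envelope relation between the figures quoted in the text.
# Assumption (not from the text): efficiency in TOPS/W is constant
# across operating points.

PEAK_TOPS = 4.0               # sustained throughput quoted in the text
EFFICIENCY_TOPS_PER_W = 55.0  # efficiency quoted in the text
LOW_POWER_W = 0.020           # "as little as 20 mW" quoted in the text


def power_watts(tops: float, tops_per_watt: float) -> float:
    """Power needed to sustain `tops` at the given efficiency."""
    return tops / tops_per_watt


def throughput_tops(power_w: float, tops_per_watt: float) -> float:
    """Throughput available from `power_w` at the given efficiency."""
    return power_w * tops_per_watt


peak_power_mw = power_watts(PEAK_TOPS, EFFICIENCY_TOPS_PER_W) * 1000
low_power_tops = throughput_tops(LOW_POWER_W, EFFICIENCY_TOPS_PER_W)

print(f"Power at {PEAK_TOPS} TOPS: about {peak_power_mw:.1f} mW")
print(f"Throughput at 20 mW: about {low_power_tops:.2f} TOPS")
```

Under that assumption, full throughput would draw on the order of tens of milliwatts, and a 20 mW budget would still leave roughly one TOPS available.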

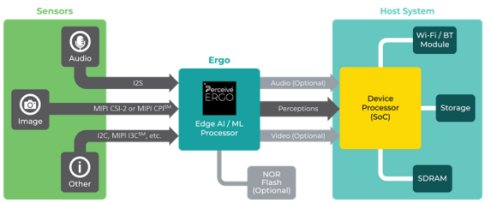

When system designers are looking for an edge processor, it is important that the processor is compatible with the other components of the system. Edge processing demands an interface between the edge processor and the array of cameras, speakers, microphones, and other sensors in the system. This means Perceive needed a set of interface specifications that are compatible with those components and fit seamlessly into its customers’ designs.

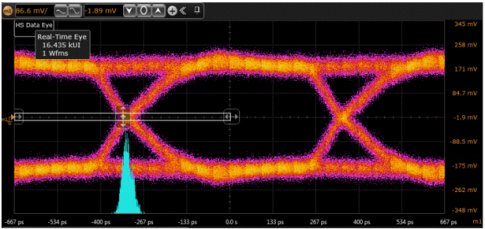

Figure 4: Ergo within a system. Image ©Perceive. Used with permission. All rights reserved.

To understand how the Ergo can be integrated into an edge device, let’s take a look at the block diagram.

Figure 5: Ergo processor block diagram. Image ©Perceive. Used with permission. All rights reserved.

The built-in Imaging Interface section includes 2 MIPI® CSI-2 and 2 CPI inputs and one MIPI CSI-2 output. This supports two simultaneous image processing pipelines: one high-performance 4K pipeline using two instances of MIPI D-PHY℠ CSI-2® RX, and one standard HD pipeline using one instance. Also included is the ability to tunnel video out via the MIPI D-PHY CSI-2 TX, which is useful in many applications, such as security, where an alert can be accompanied by the corresponding audio and video. Underneath this sub-block is the audio interface, which supports both mic inputs and a speaker output.

To the right is the CPU System, whose main functions are chip management, data flow, and communication with the host processor. Some audio preprocessing, such as temporal-to-spectral conversion, is handled by the CPU subsystem. The DSP engine does audio pre- or post-processing such as FFT.
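To illustrate what temporal-to-spectral conversion means in general, here is a minimal sketch of a naive discrete Fourier transform applied to one audio frame. It is not Perceive's implementation; an FFT computes the same result more efficiently. The sample rate, tone frequency, and frame length are hypothetical values chosen for the example.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Naive O(n^2) discrete Fourier transform; returns one magnitude per bin."""
    n = len(samples)
    mags = []
    for k in range(n):
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(samples)
        )
        mags.append(abs(acc))
    return mags


# Hypothetical test frame: a 1 kHz tone sampled at 8 kHz, 64 samples long.
SAMPLE_RATE_HZ = 8000
TONE_HZ = 1000
N = 64
frame = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE_HZ) for t in range(N)]

mags = dft_magnitudes(frame)
# For a real-valued signal only the first half of the bins is unique.
peak_bin = max(range(N // 2), key=lambda k: mags[k])
peak_hz = peak_bin * SAMPLE_RATE_HZ / N
print(f"Strongest component: {peak_hz:.0f} Hz (bin {peak_bin})")
```

The spectral form makes a tone, or an event such as breaking glass, far easier for a downstream network to pick out than the raw time-domain samples.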

At the top center, the Image Processing Unit processes raw images from the camera, using functions such as scaling, cropping, and color space conversion to make them more readily usable by the neural network fabric.

The brain of the Ergo processor is the neural network fabric in the top right-hand corner. This is where the segmentation, identification, inferences, and other functions take place. The Ergo chip supports several neural network clusters, which allow it to run multiple neural networks simultaneously and to support multiple input data types (for example, video and audio at the same time), which enables it to produce higher quality inferences. For example, motion detection accompanied by the sound of breaking glass might trigger a more trustworthy response in a security application than either of those inputs alone. The neural network clusters and their SRAM occupy over two-thirds of the area of the chip.
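The motion-plus-breaking-glass example can be sketched as a simple decision rule that combines the outputs of two networks. This is only an illustration of the idea, not how the Ergo chip fuses inferences; the `Inference` type, the labels, the threshold, and the response names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Inference:
    """Hypothetical per-network output: a label and a confidence in [0, 1]."""
    label: str
    confidence: float


def fuse(video: Inference, audio: Inference, threshold: float = 0.6) -> str:
    """Combine a video and an audio inference into one response level."""
    video_hit = video.label == "motion" and video.confidence >= threshold
    audio_hit = audio.label == "glass_break" and audio.confidence >= threshold
    if video_hit and audio_hit:
        return "alarm"    # both modalities agree: most trustworthy response
    if video_hit or audio_hit:
        return "notify"   # a single modality: lower-priority notification
    return "ignore"       # neither is confident enough


print(fuse(Inference("motion", 0.8), Inference("glass_break", 0.9)))
print(fuse(Inference("motion", 0.8), Inference("speech", 0.9)))
print(fuse(Inference("motion", 0.3), Inference("glass_break", 0.2)))
```

Requiring agreement between modalities before escalating is one way to reduce false alerts without missing single-sensor events entirely.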

MIPI Enabling Edge Devices

In the case of the Ergo chip, we see several instances of MIPI D-PHY and MIPI CSI-2 used to receive and transmit video. While originally designed for mobile applications, MIPI specifications have since been widely implemented in mobile-adjacent applications such as IoT edge devices. Since most edge applications are battery operated, power efficiency is a high priority. Just as in the home security and wearable systems, many IoT devices require cameras, displays, and sensors that have high-bandwidth, burst-based, and asymmetrical communication requirements. Because of that, MIPI specifications are a natural fit for IoT applications. The specifications are designed to interface to a broad range of components, from the modem, antenna, and system processor to the camera, display, sensors, and other peripherals. MIPI specifications were designed from the ground up to minimize power while supporting high bandwidth and strict EMI requirements. Put simply, if a system requires sensors, actuators, displays, cameras, advanced audio, or wireless communication interfaces, then it is highly likely that it can benefit from the use of MIPI specifications.

The Perceive Ergo processor is a great example of how to leverage the benefits of MIPI specifications for edge processor designs. Perceive chose the MIPI D-PHY and MIPI CSI-2 specifications not only because of the power efficiency and low EMI that they provide, but also because they are the most widely adopted specifications across the industry for this type of application, with an extensive ecosystem supporting them.

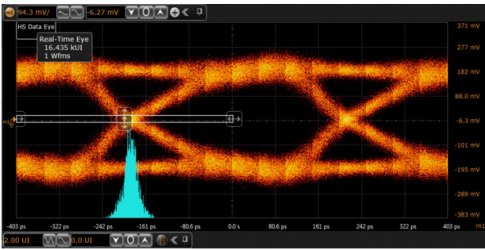

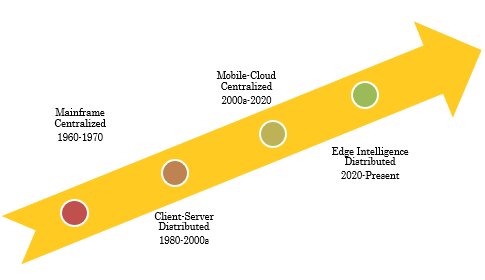

Mixel MIPI D-PHY

Mixel provided Perceive with the Mixel MIPI D-PHY CSI-2 TX and Mixel MIPI D-PHY CSI-2 RX IP. Both IPs were silicon proven in an FDSOI process before being ported to 22FDX. Perceive chose the FDSOI process because it provides the right mix of low power and low cost to achieve high performance, compared with the more costly FinFET processes. In addition, FDSOI provides more flexibility, due to the programmability of body bias, resulting in higher performance and a potential reduction in power and area. These benefits make FDSOI one of the most widely adopted processes for IoT devices.

On the receiver side, Mixel delivered 2 different area-optimized RX configurations of the CSI-2 D-PHY: a 2-lane and a 4-lane version. Both support MIPI D-PHY v2.1, which is backward compatible with v1.2 and v1.1. Both configurations run at up to 2.5Gbps/lane and support a low-power mode running at up to 80Mbps/lane. On the transmitter side, Mixel provided Perceive with an area-optimized 4-lane CSI-2 TX D-PHY. This IP also supports MIPI D-PHY v2.1 and has a high-speed transmit mode running at 2.5Gbps/lane. This transmitter is used to support the tunneling function. Figures 6 and 7 show the eye diagrams of the TX IP running at 1.5Gbps/lane and 2.5Gbps/lane. Perceive achieved first-time success with the Mixel IPs and is now in production.

Figure 6: Mixel D-PHY TX eye diagram at 1.5Gbps/lane

Figure 7: Mixel D-PHY TX eye diagram at 2.5Gbps/lane
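To put the lane counts and per-lane rates quoted above in perspective, the sketch below compares the raw aggregate rate of each configuration with the payload of two example video streams. The stream formats (30 frames per second, 10 bits per pixel) are assumptions for illustration and do not come from the text, and the calculation ignores protocol overhead and blanking.

```python
# Raw link rate versus video payload, ignoring protocol overhead and blanking.

LANE_RATE_GBPS = 2.5  # high-speed rate per lane, from the text


def link_capacity_gbps(lanes: int, lane_rate_gbps: float = LANE_RATE_GBPS) -> float:
    """Aggregate raw bit rate of a multi-lane D-PHY link."""
    return lanes * lane_rate_gbps


def video_payload_gbps(width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Uncompressed pixel payload of a video stream in Gbps."""
    return width * height * fps * bits_per_pixel / 1e9


# Assumed stream formats (hypothetical, not from the text).
uhd = video_payload_gbps(3840, 2160, 30, 10)  # 4K at 30 fps, 10 bits/pixel
hd = video_payload_gbps(1920, 1080, 30, 10)   # HD at 30 fps, 10 bits/pixel

for lanes in (2, 4):
    cap = link_capacity_gbps(lanes)
    print(f"{lanes}-lane link: {cap:.1f} Gbps raw "
          f"(4K uses {uhd / cap:.0%}, HD uses {hd / cap:.0%})")
```

Under these assumptions both streams fit with margin, which leaves room for higher frame rates, deeper pixel formats, or the protocol overhead omitted here.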

Conclusion

IoT connected systems today demand a balance between cloud and edge processing to optimize system performance. You simply cannot beat the processing power of cloud data centers, but for applications that require real-time decision-making, the edge provides the lowest latency. To support edge devices, you need a processor that is capable of machine learning. The Perceive Ergo AI processor enables inference processing at the edge to make IoT devices smarter, achieve lower latency, and improve battery life and security. Edge processor designs also demand an interface that is compatible with a broad range of IoT peripherals. MIPI CSI-2 is the de facto standard for low-power sensors and cameras, and because MIPI specifications were designed for low-power, high-bandwidth applications, they are a perfect match for IoT AI devices processing at the edge. By leveraging a differentiated, silicon-proven, MIPI CSI-2 enabled MIPI D-PHY from Mixel, Perceive was able to mitigate its risk, reduce its time to market, and provide its customers with a power-efficient, highly competitive solution for the ever-expanding and competitive AI edge processing market.

For information about Mixel’s IP portfolio, visit mixel.com/ip-cores.

Perceive and Ergo are either trademarks or registered trademarks of Perceive Corporation in the United States and/or other countries.