By Aditi Sharma, NXP

ABSTRACT

With the growing complexity of automotive subsystems, the need for multiple cores is increasing in an SoC. Multiple cores will access shared system resources all the time. When more than one core is accessing the same memory or any shared variable, in that condition there is a possibility that the output or the value of the shared variable is wrong due to race condition. Race conditions pose a serious threat to data integrity. Race conditions can be avoided if proper synchronization methods are used. One such synchronization method is using Exclusive Load and Store on a shared memory resource.

Exclusive Load and Store instructions need Exclusive access monitors on target memory.

In this article, we will see the methods required to stress Exclusive access monitors at target memory to uncover any bug buried deep inside the design.

I- INTRODUCTION TO EXCLUSIVE ACCESS

The exclusive access mechanism enables the implementation of semaphore-type operations without requiring the bus to remain locked to a particular master for the duration of the operation. The advantage of exclusive access is that semaphore-type operations do not impact either the critical bus access latency or the maximum achievable bandwidth[2].

The basic process of exclusive access is:

a) Controller_1 performs an exclusive read from a shared address location.

b) Controller_1 then immediately attempts to complete the exclusive operation by performing an exclusive write to the same shared address location.

c) Exclusive write access of Controller_1 is considered :

- Successful if EXOKAY response is received. This will occur only if no other controller has done normal or exclusive write to that location between the read and write accesses.

- Failed if OKAY response is received. It means another controller has done normal or exclusive write to the shared location between read and write accesses. In this case, the shared address location is not updated by Controller_1.

When Controller_1 performs an exclusive read, an exclusive access monitor is allocated. Exclusive access monitor contains information regarding Transaction ID, address to be monitored and the number of bytes to monitor. Now different scenarios can occur if target memory has at least two monitors out of which five scenarios are listed below:

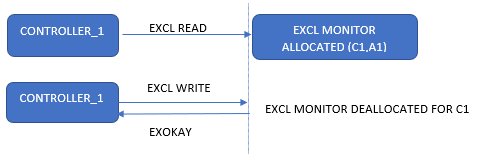

i) Controller_1(C1) performs an exclusive read at shared address A1 leading to the allocation of Exclusive access monitor. Controller_1 then immediately issues exclusive write on the same shared address A1. No other controller has written in between the Exclusive read and write operations of Controller_1. Exclusive access monitor is deallocated on Exclusive write and EXOKAY response is sent signaling Controller_1 about a successful write.

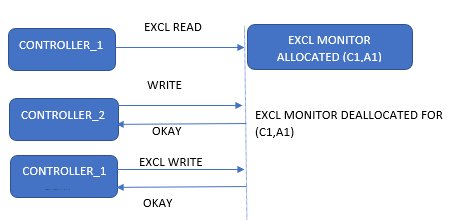

ii) Controller_1(C1) performs an Exclusive read on shared address A1.Controller_2(C2) then performs exclusive read and write on the same location. Once the exclusive write of Controller_2 succeeds with EXOKAY, both Controller_1 and Controller_2 monitors are deallocated immediately. Controller_1 performs an Exclusive write and receives an OKAY response.

Controller_1 exclusive write will be unsuccessful but Controller_2 exclusive write will be successful.

iii) Controller_1(C1) did an Exclusive read on shared address A1.Controller_2(C2) did normal write at the same location. Controller_1 monitor is deallocated immediately. Controller_1 did an Exclusive write in the end. Now both Controller_2 and Controller_1 will receive an OKAY response.Controller_1 exclusive write will be unsuccessful but Controller_2 normal write will be successful.

iv) Controller_1(C1) did an Exclusive read on shared address A1.Controller_2 did an exclusive read on the same address A1. Controller_1 did an Exclusive write and received an EXOKAY response. Both Controller_1 and Controller_2 monitors are deallocated immediately. Controller_2 did an Exclusive Write and received an OKAY response.

Controller_1 exclusive write was successful butController_2 exclusive write was unsuccessful.

v) Controller_1(C1) did an Exclusive read on shared address A1. Controller_1(C1) did an Exclusive read on a different address A2 within the same target memory. Since only one monitor can be associated with one transaction ID at target memory at any particular time, the first monitor will be deallocated immediately. Now, Controller_1 Exclusive write on address A2 will receive an EXOKAY response, and Exclusive write on address A1 will receive an OKAY response.

II.VALIDATING THE CORRECT MONITORS

A system must implement two sets of monitors to support synchronization between controllers, local and global. A Load-Exclusive operation updates all the monitors to an exclusive state[1].

Local monitors

Each controller that supports exclusive access has a local monitor. Exclusive accesses to memory locations marked as Non-shareable are checked only against this local monitor. Exclusive accesses to memory locations marked as Shareable are checked against both local and global monitors.

Global monitors

A global monitor tracks exclusive accesses to memory regions marked as Shareable. Global monitors are implemented at Target memory. Any Store-Exclusive operation targeting Shareable memory must check its local and global monitors to determine whether it can update memory. There are usually multiple Global monitors present.

If interconnect is Cache coherent, it can also have exclusive access monitors. Exclusive access to the cacheable coherent region may return from interconnect monitors themselves.

If Global monitors at the Target memory end need to be validated, it is very important to keep the cache disabled and memory region shareable in memory attributes of controller MPU/MMU settings.

III. VALIDATING METHODOLOGY

i) Eliminating setup issues

Multicore software environments can be complex needing programming in many places. To make sure that all memory attributes and cacheability settings are correct for all cores, it’s good to run a basic test involving two cores to check the functionality of global exclusive access monitors. It’s best to run Scenario ii) as mentioned in Section I. If the response is as expected, the setup issue is eliminated and we can move forward to do further stress testing on exclusive access monitors.

ii) Multiple masters accessing Different locations

As discussed earlier, there can be an ‘n’ number of Global monitors on target memory. ‘n’ monitors can be allocated if‘n’ masters do parallel exclusive reads on different target memory locations. An important point to note here is that the same master can’t allocate ‘n’ monitors at the same time. So, we can validate all ‘n’ monitors only if we have at least ‘n’ masters capable of doing exclusive access in SoC.

The following code runs on all ‘n’ cores for different memory locations:

EXCL READ: Ldrex <in_register> <memory_location_n>

WAIT: Main Core writes the key and secondary cores wait for the key

This wait is there to make sure that all cores have run an Exclusive readbefore any core attempts an Exclusive write

EXCL WRITE: Strex <result_register><out_register> <memory_location_n>

At the end of EXCL WRITE, result_register will store the result for that core. 0 means EXOKAY and 1 means OKAY. <out_register> contains the value to be written after doing some arithmetic operation on the value received in <in_register>.

All ‘n’ cores should receive EXOKAY in the case of ‘n’ global monitors. If any of the cores received OKAY, there can be an issue in the number of global monitors or interconnect monitors.

iii) Multiple masters accessing the same location

This test is designed to validate the real use case scenario where ‘n’ cores are trying to do read modify write on the same memory location in a loop. If any race condition exists, it should be captured by this test. At the end of the test, the final value of the shared location should be the number of cores* the number of loops. If the value is less than this number, a race condition exists within the system. Its needs debugging whether it’s the problem of the target memory controller or interconnect.

The following code runs on all ‘n’ cores for the same memory location:

As the number of cores increases, the number of attempts per core will also increase. The number of attempts should be comparable for all cores with the same speed. If the difference is huge, it may be possible that some cores are incurring high latencies in the path. It may need debugging.

Below is an example showing how the number of attempts increases with the increasing core count for Core_0.

REFERENCES

[1] ARM Synchronization Primitives Development Article

[2] AMBA AXI Protocol Specification (alvb.in)