By Laurent Arditi, Paul Sargent, Thomas Aird (Codasip)

Abstract

The openness of RISC-V allows customizing and extending the architecture and microarchitecture of a RISC-V based core to meet specific requirements. This appetite for more design freedom is also shifting the verification responsibility to a growing community of developers. Processor verification, however, is never easy. The very novelty and flexibility of the new specification results in new functionality that inadvertently creates specification and design bugs.

During the development of an average complexity RISC-V processor core, you can discover hundreds or even thousands of bugs. As you introduce more advanced features, you introduce new bugs that vary in complexity. Certain types of bugs are too complex for simulation to find them. You must augment your RTL verification methods by adding formal verification. From corner cases to hidden bugs, formal verification allows you to exhaustively explore all states within a reasonable amount of processing time.

In this article, we go through a formal-based, easy-to-deploy RISC-V processor verification application. We show how, together with a RISC-V ISA golden model and RISC-V compliance automatically generated checks, we can efficiently target bugs that would be out of reach for simulation. By bringing a high degree of automation through a dedicated set of assertion templates for each instruction, this approach removes the need for devising assertions manually, thus improving the productivity of your formal verification team.

1. The context

Codasip L31 is a 32-bit mid-range RISC-V embedded core for microcontroller applications. As a versatile, low-power, general-purpose CPU, it balances performance and power consumption. From IoT devices to industrial and automotive control, or as a deeply embedded core in a larger system, it brings local processing capabilities into a compact area. Designed in CodAL language with Codasip Studio, this fully customizable core includes classic extensions and features and needed efficient and thorough verification.

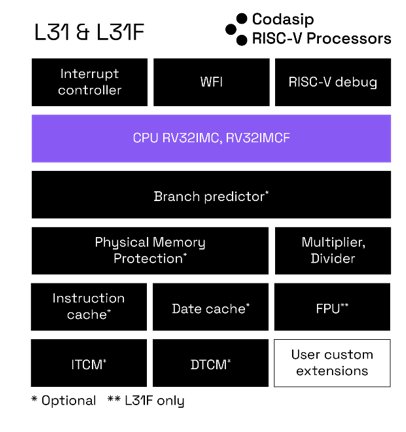

Figure 1: Codasip L31 block diagram (source: Codasip)

Table 1: Codasip L31 features

| Features | Description |

| Instruction Set Architecture (ISA) | RV32 I/M/C/F/B |

| Pipeline | 3-stage, in order |

| Branch predictor | Optional, increased single-thread performance |

| Multiplier | Parallel implementation, single-cycle multiplication |

| Divider | Sequential implementation |

| Memory protection |

Machine and User privilege modes |

| Tightly-Coupled Memory (TCM) | • Instruction and data TCMs • Customizable size up to 2MB AHB-Lite TCM secondary port |

| Interfaces | 32-bit AHB-Lite interfaces for fetch and data (AXI-Lite with caches) |

| Floating-Point Unit (FPU) | Optional, single precision |

| Debug |

|

| Interrupts |

|

2. Our verification approach

Processor verification requires strategy, diligence, and completeness.

Verifying a processor means taking uncertainty into account. What software will run on the end product? What will be the use cases? What asynchronous events could occur? These unknowns mean significantly opening the verification scope. However, it is impossible to cover the entire processor state space, and it is not something to aim for.

Processor quality must be ensured while making the best use of time and resources. Doing smart processor verification means finding relevant bugs efficiently and as early as possible in the product development. At a high level, our verification approach is based on the following:

- Verification is done during processor development, in collaboration with design teams.

- Verification is a combination of all industry standard techniques. Using multiple techniques allows you to maximize the potential of each of them and efficiently cover as many corner cases as possible.

- Verification is an ongoing science. What works is a combination of techniques that evolve as processors become more complex.

When verifying our L31 core, the idea was to make simulation and formal verification complement each other.

2.1. The benefits and purpose of simulation

Simulation is a de-facto must-have. It allows us to verify a design at two levels:

- Top-level, mostly to ensure that the design conforms to its specification (the ISA for a CPU) in the most common situations.

- Block-level, to ensure that the microarchitecture is designed as it was intended to be. However, it is difficult to link these checks with high-level architecture specifications as this usually relies on directed-random test generation, hence being able to reach tricky and uncommon situations.

Top-level simulation usually does not stress the design as much as block-level simulation does. Therefore, overall verification of the design against the ISA is achieved, but with a low level of stress.

2.2 The benefits and purpose of formal verification

Formal verification uses mathematical techniques to provide definitive answers about a design to questions written as assertions.

Formal verification tools perform an exhaustive analysis of the combination of the assertions and the design. There is no need to specify any stimuli, except to specify some illegal cases to avoid false-failures. Tools can provide a "proven" answer, which is exhaustive, or a "fail" answer, also producing a waveform showing a stimulus proving the assertion to be false. On large and complex designs, tools sometimes only provide a bounded proof, meaning that no failure scenarios exist from reset up to a specific number of cycles. Different techniques exist to either increase that cycle number, or get a “proven” or “fail” answer.

Formal verification is used in the following cases:

- To fully verify a block, potentially removing the need for any simulation. Due to the computational complexity of formal, formal-only sign-off is limited to small modules.

- To verify a block, even a large one, in addition to simulation because formal is able to find bugs in corner cases that random simulation can reach only “by luck”, with a very low probability.

- To handle some verification tasks where simulation is not adequate, for example clock-gating, X-propagation, CDC, equivalence checking, etc.

- To help investigate known bugs for which debugging information is lacking, and to qualify potential design fixes.

- To classify and identify bugs so as to learn and improve testbenches/simulation thanks to findings in formal.

- To potentially help simulation, filling holes in coverage.

3. The solution: a formal-based approach for efficient RISC-V processor verification

We decided to use the Siemens EDA Processor Verification App to efficiently verify our L31 RISC-V core. The purpose of this tool is to ensure that a processor design at RTL level correctly and exhaustively implements the Instruction Set Architecture (ISA) specification.

3.1. An end-to-end approach

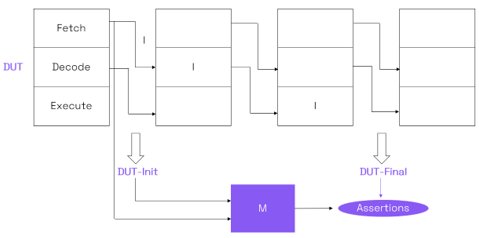

1. From a high-level and reputed “golden model”, the tool generates:

- A single-cycle execution model of the ISA, in Verilog.

- A set of assertions which check that the Design Under Test (DUT) and the Model (M) are functionally equivalent at architecture level.

Note: this is not doing any formal equivalence checking.

2. When a new Instruction (I) is fetched in the DUT, the architectural state (DUT-init) is captured.

3. The instruction is executed in the pipeline.

4. Another architectural state (DUT-final) is captured.

5. M is fed with DUT-init and I, and computes a new M-final state.

6. Assertions check that the resources in M-final and DUT-final have the same values.

Figure 2: End-to-end verification flow for the 3-stage L31 core (when the verified instruction I is neither stalled nor flushed)

This end-to-end verification approach is achievable with reasonable efforts on blocks which are smaller and simpler than a full CPU, for example a data cache. It is possible to write end-to-end assertions on a cache to verify that the data written at a specific address is correctly read from that same address. This uses well-known formal verification techniques such as a scoreboard.

For a CPU however, writing such an assertion is not doable manually. It would need to specify the semantics of each instruction, crossed with all execution modes. This is usually not done at all. The formal verification of a CPU is split into smaller pieces, however verifying that all pieces together correctly implement the ISA is still missing.

Using the proposed approach means that we were able to verify the full L31 core at once, without having to write any complex assertions. As described above, the golden model and the checking assertions are automatically generated.

This approach is at the same time highly configurable and automatic, especially for a RISC-V CPU such as L31:

- The user can specify the high-level RISC-V parameters and extensions that the design implements.

- The tool is able to automatically extract data from the design, such as linking the architectural registers with the actual flops.

- The tool allows adding customization such as new instructions to verify (with the ability for the user to “extend” the golden model).

Finally, the fact that the golden model is not developed by Codasip (except for some customized parts), provides extra guarantee, which is important from a verification independence point of view.

3.2. How we used the Siemens EDA Processor Verification App

Before we could use the tool, we needed a formal verification setup for the L31 RISC-V core. This setup is similar to the one used for formally verifying standard assertions using an Assertion-Based Verification (ABV) approach with abstractions, constraints, etc.

The tool allows you to verify specific classes of instructions, and to enable or disable some resource checks. With that, we could start the verification with a reduced space consisting of:

- Only the simplest instructions, for example only the integer arithmetic and logic instructions.

- Only the simplest (but most important) checks. For example the update of the general-purpose registers. Other checks that can be added later on are the update of the system registers (CSR), or Program Counter (PC), and memory accesses.

- Only the main functional mode: no interrupts, aborts, exceptions or debug accesses.

These three orthogonal constraints can be relaxed one by one depending on the criticality of the microarchitecture features.

Classic formal verification techniques can be used to help get results on the checker assertions: abstractions, design reductions, case splitting, invariant generation, semi-formal bug hunting, etc.

3.3. The results

This formal-based approach allowed us to find corner cases and gave us insight to improve our simulation and testbenches. This verification work was completed towards the end of the project, after other simulation-based verification flows were running without finding new bugs, which allowed us to find genuine and non-trivial bugs.

Let’s focus on three of them, found with the Siemens EDA Processor Verification App on L31.

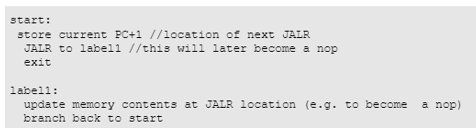

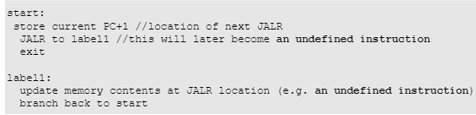

3.3.1. Branch predictor corruption

With this bug, returning to a PC address that previously held a jump/branch instruction causes the branch predictor to falsely predict the jump to another address.

This corner case is found when the following conditions are met:

Self-modifying code

An extremely rare version of this bug also occurs when an undefined instruction is added (exception on a new instruction):

This bug was found by an assertion checking the PC value.

The immediate consequence of this bug is a branch that is wrongly taken, causing the wrong execution of the code. This bug was fixed by correctly flushing both the branch prediction and the pipeline.

Finding this bug with the Siemens EDA Processor Verification App took 8 cycles and 15 minutes of runtime. Reproducing it in simulation requires a random generator that supports self-modifying code that returns exactly to the same address and that modifies that address from a branch to another type of instruction. In other words, it is highly improbable that a random generator could do that. Only a directed sequence could, knowing the details of the bug.

3.4. Multiple executions of the same instruction

With this bug, the NPC (next PC) unit stall is raised, which causes the same address to be fetched multiple times. Each instruction executes and retires.

This corner case is found when the following conditions are met:

- The core is configured with TCM.

- Specific delays are seen on the fetch bus.

- Specific stalls are seen inside the pipeline.

The immediate consequence of this bug is a stall that is not handled properly in the rest of the pipeline, causing multiple executions of the same instruction. This bug was fixed by correctly handling stalls in the rest of the pipeline.

Finding this bug with the Siemens EDA Processor Verification App took 5 cycles and 10 minutes of runtime. Reproducing it in simulation requires a random pattern of random delays and stalls, but also quite some “luck” to reproduce this particular sequence.

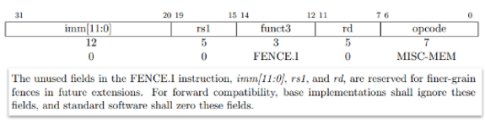

3.5 Legal FENCE.I instruction considered illegal

With this bug, a fence instruction is handled by the CSR unit. If the instruction bits corresponding to the csr addr bits of a CSR operation (bits [31:20]) match certain CSR registers (such as debug, counters), then the fence can be falsely marked as illegal.

This corner case is found when the following conditions are met:

- There are random bits in imm[11:0]/rs1/rd.

- These bits match some other illegal instructions.

The immediate consequence of this bug is an illegal instruction exception that is wrongly raised. This bug was fixed by correctly decoding the full instruction on each part of the pipeline.

Finding this bug with the Siemens EDA Processor Verification App took only 8 cycles and 5 minutes of runtime. Reproducing it in simulation is tricky because compilers will only create the simplest implementation of binary encoding. It requires either a special compiler to create the variations of legal encodings or a special directed test with various encodings.

4 Benefits/conclusion

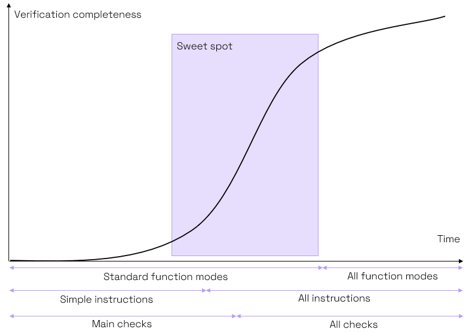

Applying this methodology provided a productivity gain for the verification team. Efficiency increased at key phases of the project. Although building a correct setup required effort at the start, progress accelerated as we added new instruction classes and new checkers. This “sweet spot” was where we found the majority of issues, with the rate slowing down as we relaxed the constraints to allow the tool to explore more esoteric modes of operation.

Figure 3: Sweet spot for best efficiency in verifying the L31 RISC-V core (source: Codasip)

Overall, using the Siemens EDA Processor Verification App was efficient because the overall work required to verify the entire CPU using this tool was much lower than trying to reach a similar validation quality manually. Of a total of 30 bugs, 15 were found with formal verification.

Table 2: Simulation effort vs. formal verification effort

| Verification technique | Simulation | Formal verification |

| Verification infrastructure | Testbench, random instruction generator, checker | Assertions provided by Siemens EDA Processor Verification App |

| Development time | Person-years of efforts | Very limited (only needed to customize the generated set-up) |

| Run time | 1000’s of 1000’s of tests/seeds 1000’s of 1000’s simulation hours | Full proof in 2 hours of run time (best case) |

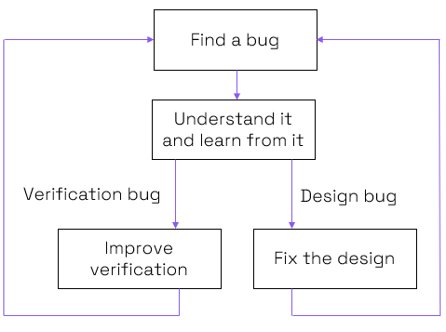

Simulation and formal verification are very powerful when combined to reach high quality and allow us to feed the virtuous circle of verification improvement.

Figure 4: Reaching best-in-class quality through continuous improvement (source: Codasip)

The implementation of this solution for L31, a 3-stage microcontroller, proved to be successful and is now deployed to our next-generation RISC-V cores, both in the embedded and application spaces. With the knowledge built by using the Siemens EDA Processor Verification App on L31, the effort to get a robust set-up is reduced for application cores, even if they are more complex. Next steps include further work to understand how this tool can apply to super-scalar and out-of-order cores, and supporting new RISC-V extensions as they come.

Additional readings

- Efficient verification of RISC-V processors - technical paper

- Building a Swiss cheese model approach for processor verification - blog post

About Codasip

Codasip is a processor solutions company which helps developers to differentiate their products. We are Europe’s leading RISC-V company with a global presence. Billions of chips already use our technology.

In today’s technology market, differentiation is everything. The difference between success and failure. And, in chip design, this difference is quite literally wafer thin. With increasing transistor costs, your developers can no longer rely on semiconductor scaling and legacy processors to achieve your goals. The only way forward is to implement custom compute with designs tailored to your applications.

We deliver custom compute through the combination of the open RISC-V ISA, Codasip Studio processor design automation and high-quality processor IP. Our innovative approach lets you easily customize and differentiate your designs. You can develop high-performing, and game-changing products that are truly transformational.