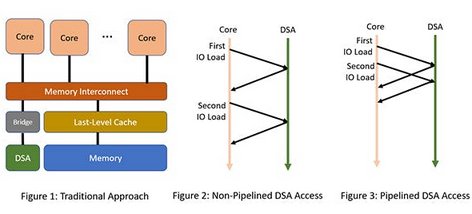

Domain-specific accelerators (DSAs) are becoming increasingly common in SoCs. A DSA provides higher performance per watt by optimizing the specialized function it implements. Examples of DSAs include compression/decompression units, random number generators and network packet processors. A DSA is typically connected to the core complex using a standard IO interconnect, such as an AXI bus (Figure 1).

RISC-V offers a unique opportunity to optimize high-bandwidth communication between cores and DSAs. Cores often issue fine-grained load and store instructions in the IO space to access DSA memory. However, these loads and stores to DSA memory might have side effects. For example, a load from a specific DSA memory address might trigger a network message. Because of such side effects, loads and stores from a core to an IO device are typically required to be observed by the IO device in the order the core issued them. This is also known as point-to-point ordering.
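To make the ordering requirement concrete, here is a minimal behavioral sketch in Python. It is a toy model, not a description of any real device: the `ToyDsa` class, the register offsets `TX_DATA` and `TX_SEND`, and the `run` helper are all hypothetical names introduced for illustration. It assumes a device where a store stages a payload and a load from a second address sends that payload as a message.

```python
from dataclasses import dataclass, field

# Hypothetical register offsets for a toy packet-processing DSA.
TX_DATA = 0x00  # store: stage a payload word
TX_SEND = 0x08  # load: side effect, sends the staged payload as a message


@dataclass
class ToyDsa:
    """Toy model of a DSA whose loads and stores have side effects."""
    staged: int = 0
    sent: list = field(default_factory=list)

    def store(self, addr, value):
        if addr == TX_DATA:
            self.staged = value

    def load(self, addr):
        if addr == TX_SEND:
            # Side effect of the load: a message goes out.
            self.sent.append(self.staged)
            return len(self.sent)
        return 0


def run(accesses):
    """Deliver accesses to a fresh device in the given order; return messages sent."""
    dsa = ToyDsa()
    for op, addr, value in accesses:
        if op == "store":
            dsa.store(addr, value)
        else:
            dsa.load(addr)
    return dsa.sent


# Program order as issued by the core: stage A, send, stage B, send.
program = [
    ("store", TX_DATA, 0xA),
    ("load", TX_SEND, None),
    ("store", TX_DATA, 0xB),
    ("load", TX_SEND, None),
]

in_order = run(program)
# Swap the 2nd and 3rd accesses, as could happen without point-to-point ordering.
reordered = run([program[0], program[2], program[1], program[3]])

print(in_order)   # [10, 11]
print(reordered)  # [11, 11]
```

In this model, swapping just two accesses causes payload `0xA` to be lost and `0xB` to be sent twice, which is the kind of failure point-to-point ordering is meant to rule out.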